- Department of Information Engineering

- Via G.B. Tiepolo 85

- Padova

- PD

- 35129

- Italy

- +39-049-827-7831

- http://robotics.dei.unipd.it

2022

Stefano Tortora, Roberto Sassi, Ruggero Carli and Emanuele Menegatti. Weighted Shared-Autonomy with Assistance-to-Target and Collision Avoidance for Intelligent Assistive Robotics. In Lecture Notes in Networks and Systems 412. 2022, 580–593.

@conference{10.1007/978-3-030-95892-3_44, id = "10.1007/978-3-030-95892-3_44", pages = "580--593", isbn = "978-3-030-95891-6", doi = "10.1007/978-3-030-95892-3_44", url = "https://link.springer.com/chapter/10.1007/978-3-030-95892-3_44", keywords = "Assistive robotics; Collision avoidance; Human-robot interaction; Robotic manipulator; Shared-autonomy", abstract = "Intelligent Assistive Robotics (IAR) has been recently introduced as a branch of Service Robotics developing semi-autonomous robots helping people with physical disability in daily-living activities. Literature often focuses on the development of assistive robots with a single semi-autonomous behavior, while the integration of multiple assistance is rarely considered. In this paper, we propose a novel shared-autonomy controller integrating the contribution of two semi-autonomous behavioral modules: an assistance-to-target module, adjusting user’s input to simplify the target reaching, and a collision avoidance module, moving the robot away from trajectories leading to possible collisions with obstacles. An arbitration function based on the risk of collision is introduced to prevent conflicts between the two behaviors. The proposed controller has been successfully evaluated both offline and online in a reach-to-grasp task with a simulated robotic manipulator. Results show that the proposed methods significantly reduced not only the time to complete the task with respect to the pure teleoperation or controllers including just one semi-autonomous behavior, but also the user’s workload controlling the manipulator with a wearable interface. 
The context-awareness employed by the IAR may increase the reliability of the human-robot interaction, pushing forward the use of this technology in complex environments to assist disabled people at home.", booktitle = "Lecture Notes in Networks and Systems", volume = 412, year = 2022, title = "Weighted Shared-Autonomy with Assistance-to-Target and Collision Avoidance for Intelligent Assistive Robotics", author = "Tortora, Stefano and Sassi, Roberto and Carli, Ruggero and Menegatti, Emanuele" }

Daniel Fusaro, Emilio Olivastri, Daniele Evangelista, Marco Imperoli, Emanuele Menegatti and Alberto Pretto. Pushing the Limits of Learning-based Traversability Analysis for Autonomous Driving on CPU. In Proceedings of INTERNATIONAL CONFERENCE ON INTELLIGENT AUTONOMOUS SYSTEMS (IAS-17). 2022.

@conference{10.48550/arXiv.2206.03083, id = "10.48550/arXiv.2206.03083", doi = "10.48550/arXiv.2206.03083", booktitle = "Proceedings of INTERNATIONAL CONFERENCE ON INTELLIGENT AUTONOMOUS SYSTEMS (IAS-17)", year = 2022, title = "Pushing the Limits of Learning-based Traversability Analysis for Autonomous Driving on CPU", author = "Fusaro, Daniel and Olivastri, Emilio and Evangelista, Daniele and Imperoli, Marco and Menegatti, Emanuele and Pretto, Alberto" }

M Yoshida, G Giruzzi, N Aiba, J F Artaud, J Ayllon-Guerola, L Balbinot, O Beeke, E Belonohy, P Bettini, W Bin, A Bierwage, T Bolzonella, M Bonotto, C Boulbe, J Buermans, M Chernyshova, S Coda, R Coelho, S Davis, C Day, G De Tommasi, M Dibon, A Ejiri, G Falchetto, A Fassina, B Faugeras, L Figini, M Fukumoto, S Futatani, K Galazka, J Garcia, M Garcia-Munoz, L Garzotti, L Giacomelli, L Giudicotti, S Hall, N Hayashi, C Hoa, M Honda, K Hoshino, M Iafrati, A Iantchenko, S Ide, S Iio, R Imazawa, S Inoue, A Isayama, E Joffrin, K Kamiya, Y Ko, M Kobayashi, T Kobayashi, G Kocsis, A Kovacsik, T Kurki-Suonio, B Lacroix, P Lang, P Lauber, A Louzguiti, E De La Luna, G Marchiori, M Mattei, A Matsuyama, S Mazzi, A Mele, F Michel, Y Miyata, J Morales, P Moreau, A Moro, T Nakano, M Nakata, E Narita, R Neu, S Nicollet, M Nocente, S Nowak, F P Orsitto, V Ostuni, Y Ohtani, N Oyama, R Pasqualotto, B Pegourie, E Perelli, L Pigatto, C Piccinni, A Pironti, P Platania, B Ploeckl, D Ricci, P Roussel, G Rubino, R Sano, K Sarkimaki, K Shinohara, S Soare, C Sozzi, S Sumida, T Suzuki, Y Suzuki, T Szabolics, T Szepesi, Y Takase, M Takech, N Tamura, K Tanaka, H Tanaka, M Tardocchi, A Terakado, H Tojo, T Tokuzawa, A Torre, N Tsujii, H Tsutsui, Y Ueda, H Urano, M Valisa, M Vallar, J Vega, F Villone, T Wakatsuki, T Wauters, M Wischmeier, S Yamoto and L Zani. Plasma physics and control studies planned in JT-60SA for ITER and DEMO operations and risk mitigation. PLASMA PHYSICS AND CONTROLLED FUSION 64, 2022.

@article{10.1088/1361-6587/ac57a0, id = "10.1088/1361-6587/ac57a0", doi = "10.1088/1361-6587/ac57a0", keywords = "JT-60SA; plasma control; risk mitigation; scenario development", abstract = "A large superconducting machine, JT-60SA has been constructed to provide major contributions to the ITER program and DEMO design. For the success of the ITER project and fusion reactor, understanding and development of plasma controllability in ITER and DEMO relevant higher beta regimes are essential. JT-60SA has focused the program on the plasma controllability for scenario development and risk mitigation in ITER as well as on investigating DEMO relevant regimes. This paper summarizes the high research priorities and strategy for the JT-60SA project. Recent works on simulation studies to prepare the plasma physics and control experiments are presented, such as plasma breakdown and equilibrium controls, hybrid and steady-state scenario development, and risk mitigation techniques. Contributions of JT-60SA to ITER and DEMO have been clarified through those studies.", volume = 64, journal = "PLASMA PHYSICS AND CONTROLLED FUSION", publisher = "IOP Publishing Ltd", year = 2022, title = "Plasma physics and control studies planned in JT-60SA for ITER and DEMO operations and risk mitigation", author = "Yoshida, M. and Giruzzi, G. and Aiba, N. and Artaud, J. F. and Ayllon-Guerola, J. and Balbinot, L. and Beeke, O. and Belonohy, E. and Bettini, P. and Bin, W. and Bierwage, A. and Bolzonella, T. and Bonotto, M. and Boulbe, C. and Buermans, J. and Chernyshova, M. and Coda, S. and Coelho, R. and Davis, S. and Day, C. and De Tommasi, G. and Dibon, M. and Ejiri, A. and Falchetto, G. and Fassina, A. and Faugeras, B. and Figini, L. and Fukumoto, M. and Futatani, S. and Galazka, K. and Garcia, J. and Garcia-Munoz, M. and Garzotti, L. and Giacomelli, L. and Giudicotti, L. and Hall, S. and Hayashi, N. and Hoa, C. and Honda, M. and Hoshino, K. and Iafrati, M. and Iantchenko, A. and Ide, S. 
and Iio, S. and Imazawa, R. and Inoue, S. and Isayama, A. and Joffrin, E. and Kamiya, K. and Ko, Y. and Kobayashi, M. and Kobayashi, T. and Kocsis, G. and Kovacsik, A. and Kurki-Suonio, T. and Lacroix, B. and Lang, P. and Lauber, P. and Louzguiti, A. and De La Luna, E. and Marchiori, G. and Mattei, M. and Matsuyama, A. and Mazzi, S. and Mele, A. and Michel, F. and Miyata, Y. and Morales, J. and Moreau, P. and Moro, A. and Nakano, T. and Nakata, M. and Narita, E. and Neu, R. and Nicollet, S. and Nocente, M. and Nowak, S. and Orsitto, F. P. and Ostuni, V. and Ohtani, Y. and Oyama, N. and Pasqualotto, R. and Pegourie, B. and Perelli, E. and Pigatto, L. and Piccinni, C. and Pironti, A. and Platania, P. and Ploeckl, B. and Ricci, D. and Roussel, P. and Rubino, G. and Sano, R. and Sarkimaki, K. and Shinohara, K. and Soare, S. and Sozzi, C. and Sumida, S. and Suzuki, T. and Suzuki, Y. and Szabolics, T. and Szepesi, T. and Takase, Y. and Takech, M. and Tamura, N. and Tanaka, K. and Tanaka, H. and Tardocchi, M. and Terakado, A. and Tojo, H. and Tokuzawa, T. and Torre, A. and Tsujii, N. and Tsutsui, H. and Ueda, Y. and Urano, H. and Valisa, M. and Vallar, M. and Vega, J. and Villone, F. and Wakatsuki, T. and Wauters, T. and Wischmeier, M. and Yamoto, S. and Zani, L." }

L Tonin, G Beraldo, S Tortora and E Menegatti. ROS-Neuro: An Open-Source Platform for Neurorobotics. FRONTIERS IN NEUROROBOTICS 16, 2022.

@article{10.3389/fnbot.2022.886050, id = "10.3389/fnbot.2022.886050", doi = "10.3389/fnbot.2022.886050", keywords = "brain-machine interface; neural interface; neurorobotics; ROS; ROS-Neuro", abstract = "The growing interest in neurorobotics has led to a proliferation of heterogeneous neurophysiological-based applications controlling a variety of robotic devices. Although recent years have seen great advances in this technology, the integration between human neural interfaces and robotics is still limited, making evident the necessity of creating a standardized research framework bridging the gap between neuroscience and robotics. This perspective paper presents Robot Operating System (ROS)-Neuro, an open-source framework for neurorobotic applications based on ROS. ROS-Neuro aims to facilitate the software distribution, the repeatability of the experimental results, and support the birth of a new community focused on neuro-driven robotics. In addition, the exploitation of Robot Operating System (ROS) infrastructure guarantees stability, reliability, and robustness, which represent fundamental aspects to enhance the translational impact of this technology. We suggest that ROS-Neuro might be the future development platform for the flourishing of a new generation of neurorobots to promote the rehabilitation, the inclusion, and the independence of people with disabilities in their everyday life.", volume = 16, journal = "FRONTIERS IN NEUROROBOTICS", publisher = "Frontiers Media S.A.", year = 2022, title = "ROS-Neuro: An Open-Source Platform for Neurorobotics", author = "Tonin, L. and Beraldo, G. and Tortora, S. and Menegatti, E." }

D Fusaro, E Olivastri, D Evangelista, P Iob and A Pretto. An Hybrid Approach to Improve the Performance of Encoder-Decoder Architectures for Traversability Analysis in Urban Environments. In 2022 IEEE Intelligent Vehicles Symposium (IV) 2022-June. 2022, 1745–1750.

@conference{10.1109/IV51971.2022.9827248, id = "10.1109/IV51971.2022.9827248", pages = "1745--1750", isbn = "978-1-6654-8821-1", doi = "10.1109/IV51971.2022.9827248", booktitle = "2022 IEEE Intelligent Vehicles Symposium (IV)", volume = "2022-June", year = 2022, title = "An Hybrid Approach to Improve the Performance of Encoder-Decoder Architectures for Traversability Analysis in Urban Environments", author = "Fusaro, D. and Olivastri, E. and Evangelista, D. and Iob, P. and Pretto, A." }

Loris Nanni, Sheryl Brahnam, Michelangelo Paci and Stefano Ghidoni. Comparison of Different Convolutional Neural Network Activation Functions and Methods for Building Ensembles for Small to Midsize Medical Data Sets. SENSORS 22, 2022.

@article{10.3390/s22166129, id = "10.3390/s22166129", doi = "10.3390/s22166129", keywords = "MeLU variants; activation functions; biomedical classification; convolutional neural networks; ensembles; Diagnostic Imaging; Neural Networks, Computer", abstract = "CNNs and other deep learners are now state-of-the-art in medical imaging research. However, the small sample size of many medical data sets dampens performance and results in overfitting. In some medical areas, it is simply too labor-intensive and expensive to amass images numbering in the hundreds of thousands. Building Deep CNN ensembles of pre-trained CNNs is one powerful method for overcoming this problem. Ensembles combine the outputs of multiple classifiers to improve performance. This method relies on the introduction of diversity, which can be introduced on many levels in the classification workflow. A recent ensembling method that has shown promise is to vary the activation functions in a set of CNNs or within different layers of a single CNN. This study aims to examine the performance of both methods using a large set of twenty activations functions, six of which are presented here for the first time: 2D Mexican ReLU, TanELU, MeLU + GaLU, Symmetric MeLU, Symmetric GaLU, and Flexible MeLU. The proposed method was tested on fifteen medical data sets representing various classification tasks. The best performing ensemble combined two well-known CNNs (VGG16 and ResNet50) whose standard ReLU activation layers were randomly replaced with another. Results demonstrate the superiority in performance of this approach.", volume = 22, journal = "SENSORS", year = 2022, title = "Comparison of Different Convolutional Neural Network Activation Functions and Methods for Building Ensembles for Small to Midsize Medical Data Sets", author = "Nanni, Loris and Brahnam, Sheryl and Paci, Michelangelo and Ghidoni, Stefano" }

A Gottardi, S Tortora, E Tosello and E Menegatti. Shared Control in Robot Teleoperation With Improved Potential Fields. IEEE TRANSACTIONS ON HUMAN-MACHINE SYSTEMS, pages 1–13, 2022.

@article{10.1109/THMS.2022.3155716, id = "10.1109/THMS.2022.3155716", pages = "1--13", doi = "10.1109/THMS.2022.3155716", url = "https://ieeexplore.ieee.org/abstract/document/9734752", keywords = "Aerospace electronics; Artificial potential fields (APFs) le2; Collision avoidance; collision avoidance; Hidden Markov models; human–robot interaction; Real-time systems; Robots; shared control; soft constraint satisfaction problem (CSP); Task analysis; teleoperation; Trajectory", abstract = "In shared control teleoperation, the robot assists the user in accomplishing the desired task. Rather than simply executing the user’s command, the robot attempts to integrate it with information from the environment, such as obstacle and/or goal locations, and it modifies its behavior accordingly. In this article, we propose a real-time shared control teleoperation framework based on an artificial potential field approach improved by the dynamic generation of escape points around the obstacles. These escape points are virtual attractive points in the potential field that the robot can follow to overcome the obstacles more easily. The selection of which escape point to follow is done in real time by solving a soft-constrained problem optimizing the reaching of the most probable goal, estimated from the user’s action. Our proposal has been extensively compared with two state-of-the-art approaches in a static cluttered environment and a dynamic setup with randomly moving objects. Experimental results showed the efficacy of our method in terms of quantitative and qualitative metrics. For example, it significantly decreases the time to complete the tasks and the user’s intervention, and it helps reduce the failure rate. Moreover, we received positive feedback from the users that tested our proposal. 
Finally, the proposed framework is compatible with both mobile and manipulator robots.", journal = "IEEE TRANSACTIONS ON HUMAN-MACHINE SYSTEMS", publisher = "Institute of Electrical and Electronics Engineers Inc.", year = 2022, title = "Shared Control in Robot Teleoperation With Improved Potential Fields", author = "Gottardi, A. and Tortora, S. and Tosello, E. and Menegatti, E." }

Gloria Beraldo, Luca Tonin, José R Millán and Emanuele Menegatti. Shared Intelligence for Robot Teleoperation via BMI. IEEE TRANSACTIONS ON HUMAN-MACHINE SYSTEMS, 2022.

@article{10.1109/THMS.2021.3137035, id = "10.1109/THMS.2021.3137035", doi = "10.1109/THMS.2021.3137035", url = "https://ieeexplore.ieee.org/abstract/document/9682521", abstract = "This article proposes a novel shared intelligence system for brain-machine interface (BMI) teleoperated mobile robots where user’s intention and robot’s intelligence are concurrent elements equally participating in the decision process. We designed the system to rely on policies guiding the robot’s behavior according to the current situation. We hypothesized that the fusion of these policies would lead to the identification of the next, most probable, location of the robot in accordance with the user’s expectations. We asked 13 healthy subjects to evaluate the system during teleoperated navigation tasks in a crowded office environment with a keyboard (reliable interface) and with 2-class motor imagery (MI) BMI (uncertain control channel). Experimental results show that our shared intelligence system 1) allows users to efficiently teleoperate the robot in both control modalities; 2) it ensures a level of BMI navigation performances comparable to the keyboard control; 3) it actively assists BMI users in accomplishing the tasks. These results highlight the importance of investigating advanced human-machine interaction (HMI) strategies and introducing robotic intelligence to improve the performances of BMI actuated devices.", journal = "IEEE TRANSACTIONS ON HUMAN-MACHINE SYSTEMS", year = 2022, title = "Shared Intelligence for Robot Teleoperation via BMI", author = "Beraldo, Gloria and Tonin, Luca and Millán, José del R. and Menegatti, Emanuele" }

Stefano Tortora, Gloria Beraldo, Francesco Bettella, Emanuela Formaggio, Maria Rubega, Alessandra Del Felice, Stefano Masiero, Ruggero Carli, Nicola Petrone, Emanuele Menegatti and Luca Tonin. Neural correlates of user learning during long-term BCI training for the Cybathlon competition. JOURNAL OF NEUROENGINEERING AND REHABILITATION 19, 2022.

@article{10.1186/s12984-022-01047-x, id = "10.1186/s12984-022-01047-x", doi = "10.1186/s12984-022-01047-x", url = "https://jneuroengrehab.biomedcentral.com/articles/10.1186/s12984-022-01047-x", keywords = "Brain-computer interface; Cybathlon; Long-term evaluation; Motor imagery; Mutual learning; Riemann geometry; User learning; Brain; Electroencephalography; Humans; Machine Learning; Reproducibility of Results; Brain-Computer Interfaces", abstract = "Brain-computer interfaces (BCIs) are systems capable of translating human brain patterns, measured through electroencephalography (EEG), into commands for an external device. Despite the great advances in machine learning solutions to enhance the performance of BCI decoders, the translational impact of this technology remains elusive. The reliability of BCIs is often unsatisfactory for end-users, limiting their application outside a laboratory environment.", volume = 19, journal = "JOURNAL OF NEUROENGINEERING AND REHABILITATION", year = 2022, title = "Neural correlates of user learning during long-term BCI training for the Cybathlon competition", author = "Tortora, Stefano and Beraldo, Gloria and Bettella, Francesco and Formaggio, Emanuela and Rubega, Maria and Del Felice, Alessandra and Masiero, Stefano and Carli, Ruggero and Petrone, Nicola and Menegatti, Emanuele and Tonin, Luca" }

A Saviolo, M Bonotto, D Evangelista, M Imperoli, J Lazzaro, E Menegatti and A Pretto. Learning to Segment Human Body Parts with Synthetically Trained Deep Convolutional Networks. In Lecture Notes in Networks and Systems 412. 2022, 696–712.

@conference{10.1007/978-3-030-95892-3_52, id = "10.1007/978-3-030-95892-3_52", pages = "696--712", isbn = "978-3-030-95891-6", doi = "10.1007/978-3-030-95892-3_52", keywords = "Deep learning; Foreground segmentation; Human body part segmentation; Semantic segmentation; Synthetic datasets", abstract = "This paper presents a new framework for human body part segmentation based on Deep Convolutional Neural Networks trained using only synthetic data. The proposed approach achieves cutting-edge results without the need of training the models with real annotated data of human body parts. Our contributions include a data generation pipeline, that exploits a game engine for the creation of the synthetic data used for training the network, and a novel pre-processing module, that combines edge response maps and adaptive histogram equalization to guide the network to learn the shape of the human body parts ensuring robustness to changes in the illumination conditions. For selecting the best candidate architecture, we perform exhaustive tests on manually annotated images of real human body limbs. We further compare our method against several high-end commercial segmentation tools on the body parts segmentation task. The results show that our method outperforms the other models by a significant margin. Finally, we present an ablation study to validate our pre-processing module. With this paper, we release an implementation of the proposed approach along with the acquired datasets.", booktitle = "Lecture Notes in Networks and Systems", volume = 412, publisher = "Springer Science and Business Media Deutschland GmbH", year = 2022, title = "Learning to Segment Human Body Parts with Synthetically Trained Deep Convolutional Networks", author = "Saviolo, A. and Bonotto, M. and Evangelista, D. and Imperoli, M. and Lazzaro, J. and Menegatti, E. and Pretto, A." }

Gloria Beraldo, Kenji Koide, Amedeo Cesta, Satoshi Hoshino, Jun Miura, Matteo Salvà and Emanuele Menegatti. Shared autonomy for telepresence robots based on people-aware navigation. In Proceedings of IEEE 16th international conference on Intelligent Autonomous System (IAS). 2022.

@conference{10.1007/978-3-030-95892-3_9, id = "10.1007/978-3-030-95892-3_9", doi = "10.1007/978-3-030-95892-3_9", url = "https://link.springer.com/chapter/10.1007/978-3-030-95892-3_9", keywords = "Shared-autonomy, People-aware, Social navigation, Dynamic environments, Telepresence robots", booktitle = "Proceedings of IEEE 16th international conference on Intelligent Autonomous System (IAS)", year = 2022, title = "Shared autonomy for telepresence robots based on people-aware navigation", author = "Beraldo, Gloria and Koide, Kenji and Cesta, Amedeo and Hoshino, Satoshi and Miura, Jun and Salvà, Matteo and Menegatti, Emanuele" }

2021

G Maguolo, L Nanni and S Ghidoni. Ensemble of convolutional neural networks trained with different activation functions. EXPERT SYSTEMS WITH APPLICATIONS 166, 2021.

@article{10.1016/j.eswa.2020.114048, doi = "10.1016/j.eswa.2020.114048", keywords = "Activation functions; Ensemble; MeLU; Neural networks", abstract = "Activation functions play a vital role in the training of Convolutional Neural Networks. For this reason, developing efficient and well-performing functions is a crucial problem in the deep learning community. The idea of these approaches is to allow a reliable parameter learning, avoiding vanishing gradient problems. The goal of this work is to propose an ensemble of Convolutional Neural Networks trained using several different activation functions. Moreover, a novel activation function is here proposed for the first time. Our aim is to improve the performance of Convolutional Neural Networks in small/medium sized biomedical datasets. Our results clearly show that the proposed ensemble outperforms Convolutional Neural Networks trained with a standard ReLU as activation function. The proposed ensemble outperforms with a p-value of 0.01 each tested stand-alone activation function; for reliable performance comparison we tested our approach on more than 10 datasets, using two well-known Convolutional Neural Networks: Vgg16 and ResNet50.", volume = 166, journal = "EXPERT SYSTEMS WITH APPLICATIONS", publisher = "Elsevier Ltd", year = 2021, title = "Ensemble of convolutional neural networks trained with different activation functions", author = "Maguolo, G. and Nanni, L. and Ghidoni, S.", id = "11577_3389751" }

Maria Rubega, Emanuela Formaggio, Roberto Di Marco, Margherita Bertuccelli, Stefano Tortora, Emanuele Menegatti, Manuela Cattelan, Paolo Bonato, Stefano Masiero and Alessandra Del Felice. Cortical correlates in upright dynamic and static balance in the elderly. SCIENTIFIC REPORTS 11, 2021.

@article{10.1038/s41598-021-93556-3, doi = "10.1038/s41598-021-93556-3", abstract = "Falls are the second most frequent cause of injury in the elderly. Physiological processes associated with aging affect the elderly's ability to respond to unexpected balance perturbations, leading to increased fall risk. Every year, approximately 30% of adults, 65 years and older, experiences at least one fall. Investigating the neurophysiological mechanisms underlying the control of static and dynamic balance in the elderly is an emerging research area. The study aimed to identify cortical and muscular correlates during static and dynamic balance tests in a cohort of young and old healthy adults. We recorded cortical and muscular activity in nine elderly and eight younger healthy participants during an upright stance task in static and dynamic (core board) conditions. To simulate real-life dual-task postural control conditions, the second set of experiments incorporated an oddball visual task. We observed higher electroencephalographic (EEG) delta rhythm over the anterior cortex in the elderly and more diffused fast rhythms (i.e., alpha, beta, gamma) in younger participants during the static balance tests. When adding a visual oddball, the elderly displayed an increase in theta activation over the sensorimotor and occipital cortices. During the dynamic balance tests, the elderly showed the recruitment of sensorimotor areas and increased muscle activity level, suggesting a preferential motor strategy for postural control. This strategy was even more prominent during the oddball task. Younger participants showed reduced cortical and muscular activity compared to the elderly, with the noteworthy difference of a preferential activation of occipital areas that increased during the oddball task. 
These results support the hypothesis that different strategies are used by the elderly compared to younger adults during postural tasks, particularly when postural and cognitive tasks are combined. The knowledge gained in this study could inform the development of age-specific rehabilitative and assistive interventions.", volume = 11, journal = "SCIENTIFIC REPORTS", year = 2021, title = "Cortical correlates in upright dynamic and static balance in the elderly", author = "Rubega, Maria and Formaggio, Emanuela and Di Marco, Roberto and Bertuccelli, Margherita and Tortora, Stefano and Menegatti, Emanuele and Cattelan, Manuela and Bonato, Paolo and Masiero, Stefano and Del Felice, Alessandra", id = "11577_3395906" }

Maria Rubega, Roberto Di Marco, Marianna Zampini, Emanuela Formaggio, Emanuele Menegatti, Paolo Bonato, Stefano Masiero and Alessandra Del Felice. Muscular and cortical activation during dynamic and static balance in the elderly: A scoping review. AGING BRAIN 1, 2021.

@article{10.1016/j.nbas.2021.100013, doi = "10.1016/j.nbas.2021.100013", url = "https://www.sciencedirect.com/science/article/pii/S2589958921000098#ak005", keywords = "Electromyography, Electroencephalography, Balance, Older adults, Postural control", abstract = "Falls due to balance impairment are a major cause of injury and disability in the elderly. The study of neurophysiological correlates during static and dynamic balance tasks is an emerging area of research that could lead to novel rehabilitation strategies and reduce fall risk. This review aims to highlight key concepts and identify gaps in the current knowledge of balance control in the elderly that could be addressed by relying on surface electromyographic (EMG) and electroencephalographic (EEG) recordings. The neurophysiological hypotheses underlying balance studies in the elderly as well as the methodologies, findings, and limitations of prior work are herein addressed. The literature shows: 1) a wide heterogeneity in the experimental procedures, protocols, and analyses; 2) a paucity of studies involving the investigation of cortical activity; 3) aging-related alterations of cortical activation during balance tasks characterized by lower cortico-muscular coherence and increased allocation of attentional control to postural tasks in the elderly; and 4) EMG patterns characterized by delayed onset after perturbations, increased levels of activity, and greater levels of muscle co-activation in the elderly compared to younger adults. EMG and EEG recordings are valuable tools to monitor muscular and cortical activity during the performance of balance tasks. However, standardized protocols and analysis techniques should be agreed upon and shared by the scientific community to provide reliable and reproducible results. 
This will allow researchers to gain a comprehensive knowledge on the neurophysiological changes affecting static and dynamic balance in the elderly and will inform the design of rehabilitative and preventive interventions.", volume = 1, journal = "AGING BRAIN", publisher = "Elsevier", year = 2021, title = "Muscular and cortical activation during dynamic and static balance in the elderly: A scoping review", author = "Rubega, Maria and Di Marco, Roberto and Zampini, Marianna and Formaggio, Emanuela and Menegatti, Emanuele and Bonato, Paolo and Masiero, Stefano and Del Felice, Alessandra", id = "11577_3388698" }

M Terreran and S Ghidoni. Light deep learning models enriched with Entangled features for RGB-D semantic segmentation. ROBOTICS AND AUTONOMOUS SYSTEMS 146, 2021.

@article{10.1016/j.robot.2021.103862, doi = "10.1016/j.robot.2021.103862", keywords = "Deep learning; Scene understanding; Semantic segmentation", abstract = "Semantic segmentation is a crucial task in emerging robotic applications like autonomous driving and social robotics. State-of-the-art methods in this field rely on deep learning, with several works in the literature following the trend of using larger networks to achieve higher performance. However, this leads to greater model complexity and higher computational costs, which make it difficult to integrate such models on mobile robots. In this work we investigate how it is possible to obtain lighter performing deep models introducing additional data at a very low computational cost, instead of increasing the network complexity. We consider the features used in the 3D Entangled Forests algorithm, proposing different strategies to integrate such additional information into different deep networks. The new features allow to obtain lighter and performing segmentation models, either by shrinking the network size or improving existing networks proposed for real-time segmentation. Such result represents an interesting alternative in mobile robotics application, where computational power and energy are limited.", volume = 146, journal = "ROBOTICS AND AUTONOMOUS SYSTEMS", publisher = "Elsevier B.V.", year = 2021, title = "Light deep learning models enriched with Entangled features for RGB-D semantic segmentation", author = "Terreran, M. and Ghidoni, S.", id = "11577_3400526" }

Mattia Guidolin, Razvan Andrei Budau Petrea, Roberto Oboe, Monica Reggiani, Emanuele Menegatti and Luca Tagliapietra. On the accuracy of IMUs for human motion tracking: a comparative evaluation. In Conference Proceedings - IEEE International Conference on Mechatronics. 2021.

@conference{10.1109/ICM46511.2021.9385684, doi = "10.1109/ICM46511.2021.9385684", abstract = "Inertial Measurement Units (IMUs) are becoming more and more popular in human motion tracking applications. Among the other advantages, wearability, portability, limited costs, and accuracy are the main drivers of their increasing use. These devices are nowadays well-established commercially available products, ranging from few to hundreds of Euros. The main purpose of this study is to investigate the potentialities and the limits of IMUs belonging to different commercial segments in providing accurate orientation estimates within the operating conditions characterizing the human motion. These are simulated by means of a direct drive servomotor, in order to ensure accuracy and repeatability of the whole assessment pipeline. Both static and dynamic conditions are analyzed, the latter obtained by varying frequency and amplitude of a sinusoidal motion, thus evaluating the performances for a broad set of movements. IMUs orientations are estimated through proprietary filters, when available, and then compared with the well-established Madgwick's filter, to effectively investigate the performances of the on-board sensors. Results show that the low-cost IMUs are suited for applications requiring low bandwidth, while the comparison through Madgwick's filter did not highlight appreciable differences among the IMUs.", booktitle = "Conference Proceedings - IEEE International Conference on Mechatronics", year = 2021, title = "On the accuracy of IMUs for human motion tracking: a comparative evaluation", author = "Guidolin, Mattia and Budau Petrea, Razvan Andrei and Oboe, Roberto and Reggiani, Monica and Menegatti, Emanuele and Tagliapietra, Luca", id = "11577_3363611" }

Mattia Guidolin, Emanuele Menegatti, Monica Reggiani and Luca Tagliapietra. A ROS driver for Xsens wireless inertial measurement unit systems. In Conference Proceedings - IEEE International Conference on Industrial Technology. 2021, 677–683.

@conference{10.1109/ICIT46573.2021.9453640, pages = "677--683", doi = "10.1109/ICIT46573.2021.9453640", keywords = "Human robot interaction, XSens, IMU, ROS, HiRos, Inertial Measurement Units", abstract = "This paper presents an efficient open-source driver for interfacing Xsens inertial measurement systems (in particular the Xsens MTw Awinda wireless motion trackers) with the Robot Operating System (ROS). The driver supports the simultaneous connection of up to 20 trackers, limit fixed by the Xsens software, to a master PC, and directly streams sensors data (linear accelerations, angular velocities, magnetic fields, orientations) up to 120 Hz to the ROS network through one or multiple configurable topics. Moreover, a synchronization procedure is implemented to avoid possible partial frames where the readings from one (or multiple) trackers are missing. The proposed messages are based on ROS standard ones and comply with the ROS developer guidelines. This guarantees the compatibility of any ROS package requiring as input ROS messages with the proposed driver, thus effectively integrating Xsens inertial measurement systems with the ROS ecosystem. This work aims to push forward the development of a large variety of human-robot interaction applications where accurate real-time knowledge of human motion is crucial.", booktitle = "Conference Proceedings - IEEE International Conference on Industrial Technology", year = 2021, title = "A ROS driver for Xsens wireless inertial measurement unit systems", author = "Guidolin, Mattia and Menegatti, Emanuele and Reggiani, Monica and Tagliapietra, Luca", id = "11577_3363610" }

Alberto Bacchin, Gloria Beraldo and Emanuele Menegatti. Learning to plan people-aware trajectories for robot navigation: A genetic algorithm. In Proceedings of IEEE European Conference on Mobile Robots (ECMR). 2021. URL, DOI BibTeX

@conference{10.1109/ECMR50962.2021.9568804, isbn = "978-166541213-1", doi = "10.1109/ECMR50962.2021.9568804", url = "https://ieeexplore.ieee.org/document/9568804", abstract = "Nowadays, one of the emergent challenges in mobile robotics consists of navigating safely and efficiently in dynamic environments populated by people. This paper focuses on the robot's motion planning by proposing a learning-based method to adjust the robot's trajectories to people's movements by respecting the proxemics rules. With this purpose, we design a genetic algorithm to train the navigation stack of ROS during the goal-based navigation while the robot is disturbed by people. We also present a simulation environment based on Gazebo that extends the animated model for emulating a more natural human's walking. Preliminary results show that our approach is able to plan people-aware robot's trajectories respecting proxemics limits without worsening the performance in navigation.", booktitle = "Proceedings of IEEE European Conference on Mobile Robots (ECMR)", year = 2021, title = "Learning to plan people-aware trajectories for robot navigation: A genetic algorithm", author = "Bacchin, Alberto and Beraldo, Gloria and Menegatti, Emanuele", id = "11577_3402573" }

Stefano Tortora, Maria Rubega, Emanuela Formaggio, Roberto Di Marco, Stefano Masiero, Emanuele Menegatti, Luca Tonin and Alessandra Del Felice. Age-related differences in visual P300 ERP during dual-task postural balance. In 2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC) 2021. 2021, 6511–6514. URL, DOI BibTeX

@conference{10.1109/EMBC46164.2021.9630088, pages = "6511--6514", isbn = "978-1-7281-1179-7", doi = "10.1109/EMBC46164.2021.9630088", url = "https://ieeexplore.ieee.org/document/9630088", abstract = "Standing and concurrently performing a cognitive task is a very common situation in everyday life. It is associated with a higher risk of falling in the elderly. Here, we aim at evaluating the differences of the P300 evoked potential elicited by a visual oddball paradigm between healthy younger (< 35 y) and older (> 64 y) adults during a simultaneous postural task. We found that P300 latency increases significantly (p < 0.001) when the elderly are engaged in more challenging postural tasks; younger adults show no effect of balance condition. Our results demonstrate that, even if the elderly have the same accuracy in odd stimuli detection as younger adults do, they require a longer processing time for stimulus discrimination. This finding suggests an increased attentional load which engages additional cerebral reserves.", booktitle = "2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC)", volume = 2021, year = 2021, title = "Age-related differences in visual P300 ERP during dual-task postural balance", author = "Tortora, Stefano and Rubega, Maria and Formaggio, Emanuela and Di Marco, Roberto and Masiero, Stefano and Menegatti, Emanuele and Tonin, Luca and Del Felice, Alessandra", id = "11577_3409536" }

R Di Marco, M Rubega, O Lennon, E Formaggio, N Sutaj, G Dazzi, C Venturin, I Bonini, R Ortner, H A C Bazo, L Tonin, S Tortora, S Masiero and A Del Felice. Experimental protocol to assess neuromuscular plasticity induced by an exoskeleton training session. METHODS AND PROTOCOLS 4, 2021. DOI BibTeX

@article{10.3390/mps4030048, doi = "10.3390/mps4030048", keywords = "Aging; EEG; EMG; Exoskeleton; Neuromuscular plasticity; Rehabilitation; Stroke", abstract = "Exoskeleton gait rehabilitation is an emerging area of research, with potential applications in the elderly and in people with central nervous system lesions, e.g., stroke, traumatic brain/spinal cord injury. However, adaptability of such technologies to the user is still an unmet goal. Despite important technological advances, these robotic systems still lack the fine tuning necessary to adapt to the physiological modification of the user and are not yet capable of a proper human-machine interaction. Interfaces based on physiological signals, e.g., recorded by electroencephalography (EEG) and/or electromyography (EMG), could contribute to solving this technological challenge. This protocol aims to: (1) quantify neuro-muscular plasticity induced by a single training session with a robotic exoskeleton on post-stroke people and on a group of age and sex-matched controls; (2) test the feasibility of predicting lower limb motor trajectory from physiological signals for future use as control signal for the robot. An active exoskeleton that can be set in full mode (i.e., the robot fully replaces and drives the user motion), adaptive mode (i.e., assistance to the user can be tuned according to his/her needs), and free mode (i.e., the robot completely follows the user movements) will be used. Participants will undergo a preparation session, i.e., EMG sensors and EEG cap placement and inertial sensors attachment to measure, respectively, muscular and cortical activity, and motion. They will then be asked to walk in a 15 m corridor: (i) self-paced without the exoskeleton (pre-training session); (ii) wearing the exoskeleton and walking with the three modes of use; (iii) self-paced without the exoskeleton (post-training session). 
From this dataset, we will: (1) quantitatively estimate short-term neuroplasticity of brain connectivity in chronic stroke survivors after a single session of gait training; (2) compare muscle activation patterns during exoskeleton-gait between stroke survivors and age and sex-matched controls; and (3) perform a feasibility analysis on the use of physiological signals to decode gait intentions.", volume = 4, journal = "METHODS AND PROTOCOLS", publisher = "MDPI AG", year = 2021, title = "Experimental protocol to assess neuromuscular plasticity induced by an exoskeleton training session", author = "Di Marco, R. and Rubega, M. and Lennon, O. and Formaggio, E. and Sutaj, N. and Dazzi, G. and Venturin, C. and Bonini, I. and Ortner, R. and Bazo, H. A. C. and Tonin, L. and Tortora, S. and Masiero, S. and Del Felice, A.", id = "11577_3408171" }

Gloria Beraldo, Luca Tonin, Amedeo Cesta and Emanuele Menegatti. Brain-Driven Telepresence Robots: A Fusion of User’s Commands with Robot’s Intelligence. In 19th Proceedings of the International Conference of the Italian Association for Artificial Intelligence 12414. 2021, 235–248. URL, DOI BibTeX

@conference{10.1007/978-3-030-77091-4_15, pages = "235--248", isbn = "978-3-030-77090-7", doi = "10.1007/978-3-030-77091-4_15", url = "https://link.springer.com/chapter/10.1007/978-3-030-77091-4_15", booktitle = "19th Proceedings of the International Conference of the Italian Association for Artificial Intelligence", volume = 12414, year = 2021, title = "Brain-Driven Telepresence Robots: A Fusion of User’s Commands with Robot’s Intelligence", author = "Beraldo, Gloria and Tonin, Luca and Cesta, Amedeo and Menegatti, Emanuele", id = "11577_3395898" }

Gloria Beraldo, Luca Tonin and Emanuele Menegatti. Shared Intelligence for User-Supervised Robots: From User’s Commands to Robot’s Actions. In 19th Proceedings of the International Conference of the Italian Association for Artificial Intelligence 12414. 2021, 457–465. URL, DOI BibTeX

@conference{10.1007/978-3-030-77091-4_27, pages = "457--465", isbn = "978-3-030-77090-7", doi = "10.1007/978-3-030-77091-4_27", url = "https://link.springer.com/chapter/10.1007/978-3-030-77091-4_27", booktitle = "19th Proceedings of the International Conference of the Italian Association for Artificial Intelligence", volume = 12414, year = 2021, title = "Shared Intelligence for User-Supervised Robots: From User’s Commands to Robot’s Actions", author = "Beraldo, Gloria and Tonin, Luca and Menegatti, Emanuele", id = "11577_3395897" }

Gloria Beraldo, Luca Tonin, Amedeo Cesta and Emanuele Menegatti. Shared approaches to mentally drive telepresence robots. In Conference Proceedings - 7th Italian Workshop on Artificial Intelligence and Robotics (AIRO 2020). 2021. URL BibTeX

@conference{11577_3359960, pages = "22--27", url = "https://www.scopus.com/record/display.uri?eid=2-s2.0-85101290718&origin=AuthorEval&zone=hIndex-DocumentList", abstract = "Recently there has been a growing interest in designing human-in-the-loop applications based on shared approaches that fuse the user’s commands with the perception of the context. In this scenario, we focus on user-supervised telepresence robots, designed to improve the quality of life of people suffering from severe physical disabilities or elderly who cannot move anymore. In this regard, we introduce brain-machine interfaces that enable users to directly control the robot through their brain activity. Since the nature of this interface, characterized by low bit rate and noise, herein, we present different methodologies to augment the human-robot interaction and to facilitate the research and the development of these technologies.", booktitle = "Conference Proceedings - 7th Italian Workshop on Artificial Intelligence and Robotics (AIRO 2020)", year = 2021, title = "Shared approaches to mentally drive telepresence robots", author = "Beraldo, Gloria and Tonin, Luca and Cesta, Amedeo and Menegatti, Emanuele", id = "11577_3359960" }

A Pretto, S Aravecchia, W Burgard, N Chebrolu, C Dornhege, T Falck, F V Fleckenstein, A Fontenla, M Imperoli, R Khanna, F Liebisch, P Lottes, A Milioto, D Nardi, S Nardi, J Pfeifer, M Popovic, C Potena, C Pradalier, E Rothacker-Feder, I Sa, A Schaefer, R Siegwart, C Stachniss, A Walter, W Winterhalter, X Wu and J Nieto. Building an Aerial-Ground Robotics System for Precision Farming: An Adaptable Solution. IEEE ROBOTICS AND AUTOMATION MAGAZINE 28:29–49, 2021. DOI BibTeX

@article{10.1109/MRA.2020.3012492, pages = "29--49", doi = "10.1109/MRA.2020.3012492", volume = 28, journal = "IEEE ROBOTICS AND AUTOMATION MAGAZINE", publisher = "Institute of Electrical and Electronics Engineers Inc.", year = 2021, title = "Building an Aerial-Ground Robotics System for Precision Farming: An Adaptable Solution", author = "Pretto, A. and Aravecchia, S. and Burgard, W. and Chebrolu, N. and Dornhege, C. and Falck, T. and Fleckenstein, F. V. and Fontenla, A. and Imperoli, M. and Khanna, R. and Liebisch, F. and Lottes, P. and Milioto, A. and Nardi, D. and Nardi, S. and Pfeifer, J. and Popovic, M. and Potena, C. and Pradalier, C. and Rothacker-Feder, E. and Sa, I. and Schaefer, A. and Siegwart, R. and Stachniss, C. and Walter, A. and Winterhalter, W. and Wu, X. and Nieto, J.", id = "11577_3392373" }

N Castaman, E Pagello, E Menegatti and A Pretto. Receding Horizon Task and Motion Planning in Changing Environments. ROBOTICS AND AUTONOMOUS SYSTEMS 145, 2021. DOI BibTeX

@article{10.1016/j.robot.2021.103863, doi = "10.1016/j.robot.2021.103863", keywords = "Non-static Environments; Robot manipulation; Task and Motion Planning", abstract = "Complex manipulation tasks require careful integration of symbolic reasoning and motion planning. This problem, commonly referred to as Task and Motion Planning (TAMP), is even more challenging if the workspace is non-static, e.g. due to human interventions and perceived with noisy non-ideal sensors. This work proposes an online approximated TAMP method that combines a geometric reasoning module and a motion planner with a standard task planner in a receding horizon fashion. Our approach iteratively solves a reduced planning problem over a receding window of a limited number of future actions during the implementation of the actions. Thus, only the first action of the horizon is actually scheduled at each iteration, then the window is moved forward, and the problem is solved again. This procedure allows to naturally take into account potential changes in the scene while ensuring good runtime performance. We validate our approach within extensive experiments in a simulated environment. We showed that our approach is able to deal with unexpected changes in the environment while ensuring comparable performance with respect to other recent TAMP approaches in solving traditional static benchmarks. We release with this paper the open-source implementation of our method.", volume = 145, journal = "ROBOTICS AND AUTONOMOUS SYSTEMS", publisher = "Elsevier B.V.", year = 2021, title = "Receding Horizon Task and Motion Planning in Changing Environments", author = "Castaman, N. and Pagello, E. and Menegatti, E. and Pretto, A.", id = "11577_3397909" }

Matteo Terreran, Daniele Evangelista, Jacopo Lazzaro and Alberto Pretto. Make It Easier: An Empirical Simplification of a Deep 3D Segmentation Network for Human Body Parts. In 2021 13th IEEE International Conference on Computer Vision Systems (ICVS), edited by Markus Vincze, Timothy Patten, Henrik I Christensen, Lazaros Nalpantidis and Ming Liu. 2021. DOI BibTeX

@inbook{10.1007/978-3-030-87156-7_12, isbn = "978-3-030-87156-7", doi = "10.1007/978-3-030-87156-7_12", booktitle = "2021 13th IEEE International Conference on Computer Vision Systems (ICVS)", editor = "Vincze, Markus and Patten, Timothy and Christensen, Henrik I. and Nalpantidis, Lazaros and Liu, Ming", year = 2021, title = "Make It Easier: An Empirical Simplification of a Deep 3D Segmentation Network for Human Body Parts", author = "Terreran, Matteo and Evangelista, Daniele and Lazzaro, Jacopo and Pretto, Alberto", id = "11577_3400531" }

M Fawakherji, C Potena, A Pretto, D D Bloisi and D Nardi. Multi-Spectral Image Synthesis for Crop/Weed Segmentation in Precision Farming. ROBOTICS AND AUTONOMOUS SYSTEMS 146, 2021. DOI BibTeX

@article{10.1016/j.robot.2021.103861, doi = "10.1016/j.robot.2021.103861", keywords = "Agricultural robotics; cGANs; Crop/weed detection; Semantic segmentation", abstract = "An effective perception system is a fundamental component for farming robots, as it enables them to properly perceive the surrounding environment and to carry out targeted operations. The most recent methods make use of state-of-the-art machine learning techniques to learn a valid model for the target task. However, those techniques need a large amount of labeled data for training. A recent approach to deal with this issue is data augmentation through Generative Adversarial Networks (GANs), where entire synthetic scenes are added to the training data, thus enlarging and diversifying their informative content. In this work, we propose an alternative solution with respect to the common data augmentation methods, applying it to the fundamental problem of crop/weed segmentation in precision farming. Starting from real images, we create semi-artificial samples by replacing the most relevant object classes (i.e., crop and weeds) with their synthesized counterparts. To do that, we employ a conditional GAN (cGAN), where the generative model is trained by conditioning the shape of the generated object. Moreover, in addition to RGB data, we take into account also near-infrared (NIR) information, generating four channel multi-spectral synthetic images. Quantitative experiments, carried out on three publicly available datasets, show that (i) our model is capable of generating realistic multi-spectral images of plants and (ii) the usage of such synthetic images in the training process improves the segmentation performance of state-of-the-art semantic segmentation convolutional networks.", volume = 146, journal = "ROBOTICS AND AUTONOMOUS SYSTEMS", publisher = "Elsevier B.V.", year = 2021, title = "Multi-Spectral Image Synthesis for Crop/Weed Segmentation in Precision Farming", author = "Fawakherji, M. and Potena, C. and Pretto, A. and Bloisi, D. D. and Nardi, D.", id = "11577_3399543" }

2020

M Antonello, S Chiesurin and S Ghidoni. Enhancing semantic segmentation with detection priors and iterated graph cuts for robotics. ENGINEERING APPLICATIONS OF ARTIFICIAL INTELLIGENCE 90:1–14, 2020. DOI BibTeX

@article{10.1016/j.engappai.2019.103467, pages = "1--14", doi = "10.1016/j.engappai.2019.103467", keywords = "Mapping; Object detection; Segmentation and categorization; Semantic scene understanding", abstract = "To foster human–robot interaction, autonomous robots need to understand the environment in which they operate. In this context, one of the main challenges is semantic segmentation, together with the recognition of important objects, which can aid robots during exploration, as well as when planning new actions and interacting with the environment. In this study, we extend a multi-view semantic segmentation system based on 3D Entangled Forests (3DEF) by integrating and refining two object detectors, Mask R-CNN and You Only Look Once (YOLO), with Bayesian fusion and iterated graph cuts. The new system takes the best of its components, successfully exploiting both 2D and 3D data. Our experiments show that our approach is competitive with the state-of-the-art and leads to accurate semantic segmentations.", volume = 90, journal = "ENGINEERING APPLICATIONS OF ARTIFICIAL INTELLIGENCE", publisher = "Elsevier Ltd", year = 2020, title = "Enhancing semantic segmentation with detection priors and iterated graph cuts for robotics", author = "Antonello, M. and Chiesurin, S. and Ghidoni, S.", id = "11577_3333945" }

Paolo Franceschi, Nicola Castaman, Stefano Ghidoni and Nicola Pedrocchi. Precise Robotic Manipulation of Bulky Components. IEEE ACCESS 8:222476–222485, 2020. DOI BibTeX

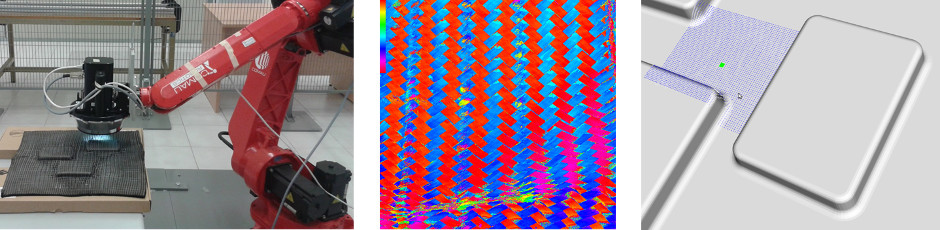

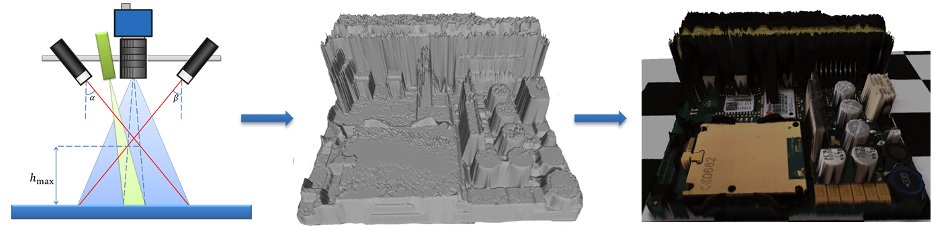

@article{10.1109/ACCESS.2020.3043069, pages = "222476--222485", doi = "10.1109/ACCESS.2020.3043069", volume = 8, journal = "IEEE ACCESS", year = 2020, title = "Precise Robotic Manipulation of Bulky Components", author = "Franceschi, Paolo and Castaman, Nicola and Ghidoni, Stefano and Pedrocchi, Nicola", id = "11577_3360183" }

Loris Roveda, Nicola Castaman, Paolo Franceschi, Stefano Ghidoni and Nicola Pedrocchi. A Control Framework Definition to Overcome Position/Interaction Dynamics Uncertainties in Force-Controlled Tasks. In Proceedings of 2020 IEEE International Conference on Robotics and Automation (ICRA). 2020, 6819–6825. DOI BibTeX

@conference{10.1109/ICRA40945.2020.9197141, pages = "6819--6825", isbn = "978-1-7281-7395-5", doi = "10.1109/ICRA40945.2020.9197141", booktitle = "Proceedings of 2020 IEEE International Conference on Robotics and Automation (ICRA)", year = 2020, title = "A Control Framework Definition to Overcome Position/Interaction Dynamics Uncertainties in Force-Controlled Tasks", author = "Roveda, Loris and Castaman, Nicola and Franceschi, Paolo and Ghidoni, Stefano and Pedrocchi, Nicola", id = "11577_3342003" }

L Nanni, A Lumini, S Ghidoni and G Maguolo. Stochastic selection of activation layers for convolutional neural networks. SENSORS 20:1–15, 2020. DOI BibTeX

@article{10.3390/s20061626, pages = "1--15", doi = "10.3390/s20061626", keywords = "Activation functions; Convolutional Neural Networks; Ensemble of classifiers; Image classification; Skin detection", abstract = "In recent years, the field of deep learning has achieved considerable success in pattern recognition, image segmentation, and many other classification fields. There are many studies and practical applications of deep learning on images, video, or text classification. Activation functions play a crucial role in discriminative capabilities of the deep neural networks and the design of new “static” or “dynamic” activation functions is an active area of research. The main difference between “static” and “dynamic” functions is that the first class of activations considers all the neurons and layers as identical, while the second class learns parameters of the activation function independently for each layer or even each neuron. Although the “dynamic” activation functions perform better in some applications, the increased number of trainable parameters requires more computational time and can lead to overfitting. In this work, we propose a mixture of “static” and “dynamic” activation functions, which are stochastically selected at each layer. Our idea for model design is based on a method for changing some layers along the lines of different functional blocks of the best performing CNN models, with the aim of designing new models to be used as stand-alone networks or as a component of an ensemble. We propose to replace each activation layer of a CNN (usually a ReLU layer) by a different activation function stochastically drawn from a set of activation functions: in this way, the resulting CNN has a different set of activation function layers. 
The code developed for this work will be available at https://github.com/LorisNanni.", volume = 20, journal = "SENSORS", publisher = "MDPI AG", year = 2020, title = "Stochastic selection of activation layers for convolutional neural networks", author = "Nanni, L. and Lumini, A. and Ghidoni, S. and Maguolo, G.", id = "11577_3334022" }

K Koide and E Menegatti. Non-overlapping RGB-D camera network calibration with monocular visual odometry. In IEEE International Conference on Intelligent Robots and Systems. 2020, 9005–9011. DOI BibTeX

@conference{10.1109/IROS45743.2020.9340825, pages = "9005--9011", isbn = "978-1-7281-6212-6", doi = "10.1109/IROS45743.2020.9340825", abstract = "This paper describes a calibration method for RGB-D camera networks consisting of not only static overlapping, but also dynamic and non-overlapping cameras. The proposed method consists of two steps: online visual odometry-based calibration and depth image-based calibration refinement. It first estimates the transformations between overlapping cameras using fiducial tags, and bridges non-overlapping camera views through visual odometry that runs on a dynamic monocular camera. Parameters such as poses of the static cameras and tags, as well as dynamic camera trajectory, are estimated in the form of the pose graph-based online landmark SLAM. Then, depth-based ICP and floor constraints are added to the pose graph to compensate for the visual odometry error and refine the calibration result. The proposed method is validated through evaluation in simulated and real environments, and a person tracking experiment is conducted to demonstrate the data integration of static and dynamic cameras.", booktitle = "IEEE International Conference on Intelligent Robots and Systems", publisher = "Institute of Electrical and Electronics Engineers Inc.", year = 2020, title = "Non-overlapping RGB-D camera network calibration with monocular visual odometry", author = "Koide, K. and Menegatti, E.", id = "11577_3389558" }

Y Zhao, T Birdal, J E Lenssen, E Menegatti, L Guibas and F Tombari. Quaternion Equivariant Capsule Networks for 3D Point Clouds. In Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics) 12346. 2020, 1–19. DOI BibTeX

@conference{10.1007/978-3-030-58452-8_1, pages = "1--19", isbn = "978-3-030-58451-1", doi = "10.1007/978-3-030-58452-8_1", keywords = "3D; Disentanglement; Equivariance; Quaternion; Rotation", abstract = "We present a 3D capsule module for processing point clouds that is equivariant to 3D rotations and translations, as well as invariant to permutations of the input points. The operator receives a sparse set of local reference frames, computed from an input point cloud and establishes end-to-end transformation equivariance through a novel dynamic routing procedure on quaternions. Further, we theoretically connect dynamic routing between capsules to the well-known Weiszfeld algorithm, a scheme for solving iterative re-weighted least squares (IRLS) problems with provable convergence properties. It is shown that such group dynamic routing can be interpreted as robust IRLS rotation averaging on capsule votes, where information is routed based on the final inlier scores. Based on our operator, we build a capsule network that disentangles geometry from pose, paving the way for more informative descriptors and a structured latent space. Our architecture allows joint object classification and orientation estimation without explicit supervision of rotations. We validate our algorithm empirically on common benchmark datasets.", booktitle = "Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics)", volume = 12346, publisher = "Springer Science and Business Media Deutschland GmbH", year = 2020, title = "Quaternion Equivariant Capsule Networks for 3D Point Clouds", author = "Zhao, Y. and Birdal, T. and Lenssen, J. E. and Menegatti, E. and Guibas, L. and Tombari, F.", id = "11577_3389570" }

F Just, O Ozen, S Tortora, V Klamroth-Marganska, R Riener and G Rauter. Human arm weight compensation in rehabilitation robotics: Efficacy of three distinct methods. JOURNAL OF NEUROENGINEERING AND REHABILITATION 17, 2020. URL, DOI BibTeX

@article{10.1186/s12984-020-0644-3, doi = "10.1186/s12984-020-0644-3", url = "https://jneuroengrehab.biomedcentral.com/articles/10.1186/s12984-020-0644-3#citeas", keywords = "Arm weight compensation; EMG; Rehabilitation robotics; Stroke; Workspace assessment", abstract = "Background: Arm weight compensation with rehabilitation robots for stroke patients has been successfully used to increase the active range of motion and reduce the effects of pathological muscle synergies. However, the differences in structure, performance, and control algorithms among the existing robotic platforms make it hard to effectively assess and compare human arm weight relief. In this paper, we introduce criteria for ideal arm weight compensation, and furthermore, we propose and analyze three distinct arm weight compensation methods (Average, Full, Equilibrium) in the arm rehabilitation exoskeleton 'ARMin'. The effect of the best performing method was validated in chronic stroke subjects to increase the active range of motion in three dimensional space. Methods: All three methods are based on arm models that are generalizable for use in different robotic devices and allow individualized adaptation to the subject by model parameters. The first method Average uses anthropometric tables to determine subject-specific parameters. The parameters for the second method Full are estimated based on force sensor data in predefined resting poses. The third method Equilibrium estimates parameters by optimizing an equilibrium of force/torque equations in a predefined resting pose. The parameters for all three methods were first determined and optimized for temporal and spatial estimation sensitivity. Then, the three methods were compared in a randomized single-center study with respect to the remaining electromyography (EMG) activity of 31 healthy participants who performed five arm poses covering the full range of motion with the exoskeleton robot. 
The best method was chosen for feasibility tests with three stroke patients. In detail, the influence of arm weight compensation on the three dimensional workspace was assessed by measuring of the horizontal workspace at three different height levels in stroke patients. Results: All three arm weight compensation methods reduced the mean EMG activity of healthy subjects to at least 49% compared with the no compensation reference. The Equilibrium method outperformed the Average and the Full methods with a highly significant reduction in mean EMG activity by 19% and 28% respectively. However, upon direct comparison, each method has its own individual advantages such as in set-up time, cost, or required technology. The horizontal workspace assessment in poststroke patients with the Equilibrium method revealed potential workspace size-dependence of arm height, while weight compensation helped maximize the workspace as much as possible. Conclusion: Different arm weight compensation methods were developed according to initially defined criteria. The methods were then analyzed with respect to their sensitivity and required technology. In general, weight compensation performance improved with the level of technology, but increased cost and calibration efforts. This study reports a systematic way to analyze the efficacy of different weight compensation methods using EMG. Additionally, the feasibility of the best method, Equilibrium, was shown by testing with three stroke patients. In this test, a height dependence of the workspace size also seemed to be present, which further highlights the importance of patient-specific weight compensation, particularly for training at different arm heights. Trial registration: ClinicalTrials.gov,NCT02720341. 
Registered 25 March 2016", volume = 17, journal = "JOURNAL OF NEUROENGINEERING AND REHABILITATION", publisher = "BioMed Central Ltd.", year = 2020, title = "Human arm weight compensation in rehabilitation robotics: Efficacy of three distinct methods", author = "Just, F. and Ozen, O. and Tortora, S. and Klamroth-Marganska, V. and Riener, R. and Rauter, G.", id = "11577_3342656" }

S Tortora, S Ghidoni, C Chisari, S Micera and F Artoni. Deep learning-based BCI for gait decoding from EEG with LSTM recurrent neural network. JOURNAL OF NEURAL ENGINEERING 17:1–14, 2020. DOI BibTeX

@article{10.1088/1741-2552/ab9842, pages = "1--14", doi = "10.1088/1741-2552/ab9842", keywords = "brain-computer interface (BCI); deep learning; electroencephalography (EEG); locomotion; long short-term memory (LSTM); mobile brain/body imaging (MoBI)", abstract = "Objective. Mobile Brain/Body Imaging (MoBI) frameworks allowed the research community to find evidence of cortical involvement at walking initiation and during locomotion. However, the decoding of gait patterns from brain signals remains an open challenge. The aim of this work is to propose and validate a deep learning model to decode gait phases from Electroencephalography (EEG). Approach. A Long-Short Term Memory (LSTM) deep neural network has been trained to deal with time-dependent information within brain signals during locomotion. The EEG signals have been preprocessed by means of Artifacts Subspace Reconstruction (ASR) and Reliable Independent Component Analysis (RELICA) to ensure that classification performance was not affected by movement-related artifacts. Main results. The network was evaluated on the dataset of 11 healthy subjects walking on a treadmill. The proposed decoding approach shows a robust reconstruction (AUC > 90%) of gait patterns (i.e. swing and stance states) of both legs together, or of each leg independently. Significance. Our results support for the first time the use of a memory-based deep learning classifier to decode walking activity from non-invasive brain recordings. We suggest that this classifier, exploited in real time, can be a more effective input for devices restoring locomotion in impaired people.", volume = 17, journal = "JOURNAL OF NEURAL ENGINEERING", publisher = "Institute of Physics Publishing", year = 2020, title = "Deep learning-based BCI for gait decoding from EEG with LSTM recurrent neural network", author = "Tortora, S. and Ghidoni, S. and Chisari, C. and Micera, S. and Artoni, F.", id = "11577_3351210" }

Matteo Terreran, Elia Bonetto and Stefano Ghidoni. Enhancing Deep Semantic Segmentation of RGB-D Data with Entangled Forests. In 2020 25th IEEE International Conference on Pattern Recognition (ICPR). 2020, 4634–4641. DOI BibTeX

@conference{10.1109/ICPR48806.2021.9412787, pages = "4634--4641", isbn = "978-1-7281-8809-6", doi = "10.1109/ICPR48806.2021.9412787", keywords = "semantic segmentation, scene understanding, deep learning", abstract = "Semantic segmentation is a problem which is getting more and more attention in the computer vision community. Nowadays, deep learning methods represent the state of the art to solve this problem, and the trend is to use deeper networks to get higher performance. The drawback with such models is a higher computational cost, which makes it difficult to integrate them on mobile robot platforms. In this work we want to explore how to obtain lighter deep learning models without compromising performance. To do so we will consider the features used in the 3D Entangled Forests algorithm and we will study the best strategies to integrate these within the FuseNet deep network. Such new features allow us to shrink the network size without losing performance, hence obtaining a lighter model which achieves state-of-the-art performance on the semantic segmentation task and represents an interesting alternative for mobile robotics applications, where computational power and energy are limited.", booktitle = "2020 25th IEEE International Conference on Pattern Recognition (ICPR)", year = 2020, title = "Enhancing Deep Semantic Segmentation of RGB-D Data with Entangled Forests", author = "Terreran, Matteo and Bonetto, Elia and Ghidoni, Stefano", id = "11577_3373551" }

Matteo Terreran, Edoardo Lamon, Stefano Michieletto and Enrico Pagello. Low-cost Scalable People Tracking System for Human-Robot Collaboration in Industrial Environment. PROCEDIA MANUFACTURING 51:116–124, 2020. URL, DOI BibTeX

@article{https://doi.org/10.1016/j.promfg.2020.10.018, pages = "116--124", doi = "10.1016/j.promfg.2020.10.018", url = "http://www.sciencedirect.com/science/article/pii/S2351978920318734", keywords = "People tracking, human-robot collaboration, low-cost industrial", abstract = "Human-robot collaboration is one of the key elements in the Industry 4.0 revolution, aiming at a close and direct collaboration between robots and human workers to reach higher productivity and improved ergonomics. The first step toward this kind of collaboration in the industrial context is the removal of physical safety barriers usually surrounding standard robotic cells, so that human workers can approach and directly collaborate with robots. However, human safety must be guaranteed by avoiding possible collisions with the robot. In this work, we propose the use of a people tracking algorithm to monitor people moving around a robot manipulator and recognize when a person is too close to the robot while performing a task. 
The system is implemented with a camera network positioned around the robot workspace, and thoroughly evaluated in different industry-like settings in terms of both tracking accuracy and detection delay.", volume = 51, journal = "PROCEDIA MANUFACTURING", year = 2020, title = "Low-cost Scalable People Tracking System for Human-Robot Collaboration in Industrial Environment", author = "Terreran, Matteo and Lamon, Edoardo and Michieletto, Stefano and Pagello, Enrico", id = "11577_3358830" }

G Giruzzi, M Yoshida, N Aiba, J F Artaud, J Ayllon-Guerola, O Beeke, A Bierwage, T Bolzonella, M Bonotto, C Boulbe, M Chernyshova, S Coda, R Coelho, D Corona, N Cruz, S Davis, C Day, G De Tommasi, M Dibon, D Douai, D Farina, A Fassina, B Faugeras, L Figini, M Fukumoto, S Futatani, K Galazka, J Garcia, M Garcia-Muñoz, L Garzotti, L Giudicotti, N Hayashi, M Honda, K Hoshino, A Iantchenko, S Ide, S Inoue, A Isayama, E Joffrin, Y Kamada, K Kamiya, M Kashiwagi, H Kawashima, T Kobayashi, A Kojima, T Kurki-Suonio, P Lang, Ph Lauber, E de la Luna, G Marchiori, G Matsunaga, A Matsuyama, M Mattei, S Mazzi, A Mele, Y Miyata, S Moriyama, JOSE' FRANCISCO Morales, A Moro, T Nakano, R Neu, S Nowak, Fp Orsitto, V Ostuni, N Oyama, S Paméla, R Pasqualotto, B Pégourié, E Perelli, L Pigatto, C Piron, A Pironti, P Platania, B Ploeckl, Daniel RICCI PACIFICI, M Romanelli, G Rubino, S Sakurai, K Särkimäki, M Scannapiego, K Shinohara, J Shiraishi, S Soare, C Sozzi, T Suzuki, Y Suzuki, T Szepesi, M Takechi, K Tanaka, H Tojo, M Turnyanskiy, H Urano, M Valisa, M Vallar, J Varje, J Vega, F Villone, T Wakatsuki, T Wauters, M Wischmeier, S Yamoto and R Zagórski. Advances in the physics studies for the JT-60SA tokamak exploitation and research plan. PLASMA PHYSICS AND CONTROLLED FUSION 62, 2020. URL, DOI BibTeX

@article{10.1088/1361-6587/ab4771, doi = "10.1088/1361-6587/ab4771", url = "https://iopscience.iop.org/article/10.1088/1361-6587/ab4771", volume = 62, journal = "PLASMA PHYSICS AND CONTROLLED FUSION", year = 2020, title = "Advances in the physics studies for the JT-60SA tokamak exploitation and research plan", author = "Giruzzi, G and Yoshida, M and Aiba, N and Artaud, J F and Ayllon-Guerola, J and Beeke, O and Bierwage, A and Bolzonella, T and Bonotto, M and Boulbe, C and Chernyshova, M and Coda, S and Coelho, R and Corona, D and Cruz, N and Davis, S and Day, C and De Tommasi, G and Dibon, M and Douai, D and Farina, D and Fassina, A and Faugeras, B and Figini, L and Fukumoto, M and Futatani, S and Galazka, K and Garcia, J and Garcia-Muñoz, M and Garzotti, L and Giudicotti, L and Hayashi, N and Honda, M and Hoshino, K and Iantchenko, A and Ide, S and Inoue, S and Isayama, A and Joffrin, E and Kamada, Y and Kamiya, K and Kashiwagi, M and Kawashima, H and Kobayashi, T and Kojima, A and Kurki-Suonio, T and Lang, P and Lauber, Ph and de la Luna, E and Marchiori, G and Matsunaga, G and Matsuyama, A and Mattei, M and Mazzi, S and Mele, A and Miyata, Y and Moriyama, S and Morales, JOSE' FRANCISCO and Moro, A and Nakano, T and Neu, R and Nowak, S and Orsitto, Fp and Ostuni, V and Oyama, N and Paméla, S and Pasqualotto, R and Pégourié, B and Perelli, E and Pigatto, L and Piron, C and Pironti, A and Platania, P and Ploeckl, B and RICCI PACIFICI, Daniel and Romanelli, M and Rubino, G and Sakurai, S and Särkimäki, K and Scannapiego, M and Shinohara, K and Shiraishi, J and Soare, S and Sozzi, C and Suzuki, T and Suzuki, Y and Szepesi, T and Takechi, M and Tanaka, K and Tojo, H and Turnyanskiy, M and Urano, H and Valisa, M and Vallar, M and Varje, J and Vega, J and Villone, F and Wakatsuki, T and Wauters, T and Wischmeier, M and Yamoto, S and Zagórski, R", id = "11577_3324033" }

Matteo Bonotto, Fabio Villone, Yueqiang Liu and Paolo Bettini. Matrix Based Rational Interpolation for New Coupling Scheme Between MHD and Eddy Current Numerical Models. IEEE TRANSACTIONS ON MAGNETICS, pages 1–1, 2020. DOI BibTeX

@article{10.1109/TMAG.2019.2954648, pages = "1--1", doi = "10.1109/TMAG.2019.2954648", journal = "IEEE TRANSACTIONS ON MAGNETICS", year = 2020, title = "Matrix Based Rational Interpolation for New Coupling Scheme Between MHD and Eddy Current Numerical Models", author = "Bonotto, Matteo and Villone, Fabio and Liu, Yueqiang and Bettini, Paolo", id = "11577_3325022" }

Silvia Di Battista, Monica Pivetti, Michele Moro and Emanuele Menegatti. Teachers’ opinions towards Educational Robotics for special needs students: an exploratory Italian study. ROBOTICS 9 (3), 2020. URL, DOI BibTeX

@article{10.3390/robotics9030072, doi = "10.3390/robotics9030072", url = "https://www.mdpi.com/2218-6581/9/3/72/htm", keywords = "educational robotics, special needs students, educational contexts, learning support teachers, attention deficit hyperactivity disorder—ADHD, autism spectrum disorder—ASD, dyspraxia, Down syndrome—DS", abstract = "Research reveals that robotics can be a valuable tool for school students with special needs (SNs). However, to our knowledge, empirical studies on teachers’ attitudes towards educational robotics for SNs students have been very limited and, in general, do not account for the great variability in the existing difficulties of school-aged children. Our aim is to fill this research gap. This post-test empirical study assessed Italian pre-service and in-service learning support teachers’ attitudes towards the application of Educational Robotics—ER with their students with SNs at the end of a 12-h training course. The results generally showed that most teachers perceived ER as a powerful tool for children with numerous SNs, particularly for Attention Deficit Hyperactivity Disorder—ADHD, Autism Spectrum Disorder—ASD, and Dyspraxia. Looking at the differences depending on the school level, kindergarten teachers perceived that ER is mostly helpful for ASD, ADHD, Down Syndrome—DS as well as with psychological or emotional distress or the needs of foreign students. For primary school teachers, ER was mostly helpful with ADHD, Dyspraxia and ASD. For both junior secondary school teachers and high school teachers, ER was mostly helpful with ASD, Dyspraxia, and ADHD.", volume = "9 (3)", journal = "ROBOTICS", year = 2020, title = "Teachers’ opinions towards Educational Robotics for special needs students: an exploratory Italian study", author = "Di Battista, Silvia and Pivetti, Monica and Moro, Michele and Menegatti, Emanuele", id = "11577_3357753" }

Kenji Koide, Jun Miura and Emanuele Menegatti. Monocular person tracking and identification with on-line deep feature selection for person following robots. ROBOTICS AND AUTONOMOUS SYSTEMS 124:1–11, 2020. DOI BibTeX

@article{10.1016/j.robot.2019.103348, pages = "1--11", doi = "10.1016/j.robot.2019.103348", keywords = "Person tracking; Person identification; Mobile robot", abstract = "This paper presents a new person tracking and identification framework based solely on a monocular camera. In this framework, we first track persons in the robot coordinate space using an Unscented Kalman filter with the ground plane information and human height estimation. Then, we identify the target person to be followed with the combination of Convolutional Channel Features (CCF) and online boosting. It allows us to take advantage of deep neural network-based feature representation while adapting the person classifier to a specific target person depending on the circumstances. The entire system can be run on a recent embedded computation board with a GPU (NVIDIA Jetson TX2), and it can easily be reproduced and reused on a new mobile robot platform. Through evaluations, we validated that the proposed method outperforms existing person identification methods for mobile robots. We applied the proposed method to a real person following robot, and it has been shown that CCF-based person identification realizes robust person following in both indoor and outdoor environments.", volume = 124, journal = "ROBOTICS AND AUTONOMOUS SYSTEMS", publisher = "Elsevier", year = 2020, title = "Monocular person tracking and identification with on-line deep feature selection for person following robots", author = "Koide, Kenji and Miura, Jun and Menegatti, Emanuele", id = "11577_3324498" }

Cristina Forest, Gloria Beraldo, Roberto Mancin, Emanuele Menegatti and Agnese Suppiej. Maturational aspects of visual P300 in children: a research window for pediatric Brain Computer Interface (BCI)*. In Proceedings of the 2020 29th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN). 2020, 451–455. URL, DOI BibTeX

@conference{10.1109/RO-MAN47096.2020.9223586, pages = "451--455", isbn = "978-1-7281-6075-7", doi = "10.1109/RO-MAN47096.2020.9223586", url = "https://ieeexplore.ieee.org/document/9223586/authors#authors", abstract = "The P300 is an endogenous event-related potential (ERP) involved in several cognitive processes, apparently preserved between adults and children. In the pediatric age it shows different age-related characteristics. Its application in pediatric Brain Computer Interface (BCI) research remains unclear to date. The aim of this paper is to give an overview of the maturational aspects of the visual P300, which could be used for developing BCI paradigms in the pediatric age.", booktitle = "Proceedings of the 2020 29th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN)", year = 2020, title = "Maturational aspects of visual P300 in children: a research window for pediatric Brain Computer Interface (BCI)*", author = "Forest, Cristina and Beraldo, Gloria and Mancin, Roberto and Menegatti, Emanuele and Suppiej, Agnese", id = "11577_3354665" }

G Nicola, L Tagliapietra, E Tosello, N Navarin, S Ghidoni and E Menegatti. Robotic Object Sorting via Deep Reinforcement Learning: A generalized approach. In 29th IEEE International Conference on Robot and Human Interactive Communication, RO-MAN 2020. 2020, 1266–1273. DOI BibTeX