Projects

Since 2004, IAS-Lab of the University of Padova has recognized that the tools and competencies it had been developing in autonomous robotics and artificial intelligence could be applied to industrial robotics. At that time, well ahead of the current Industry 4.0 trend, IAS-Lab understood that industrial robots needed more intelligence and more perception. For this reason, in 2005, IAS-Lab founded the spin-off company IT+Robotics srl. The expertise of IAS-Lab in intelligent industrial robots has grown year after year, and IAS-Lab has won many industrial research grants in the competitive calls of the European Commission.

Waste management is a huge problem. The world produces more than 2.24 billion tonnes of solid waste each year, according to World Bank estimates, and only 13.5% of it is recycled. We effectively throw away tons of raw materials that could be recovered to build a more sustainable development model. Why? There are many barriers, but the first step in recycling is sorting. The technologies used in current waste treatment plants cannot separate different types of materials with a good grade of purity and recovery. Human labor is still used to compensate, but it is almost impossible to handle such a huge quantity of waste by hand. Thus, our group is working on a Robotic Waste Sorting System (RWSS) that exploits the potential of industrial robots in waste sorting plants.

The Concept

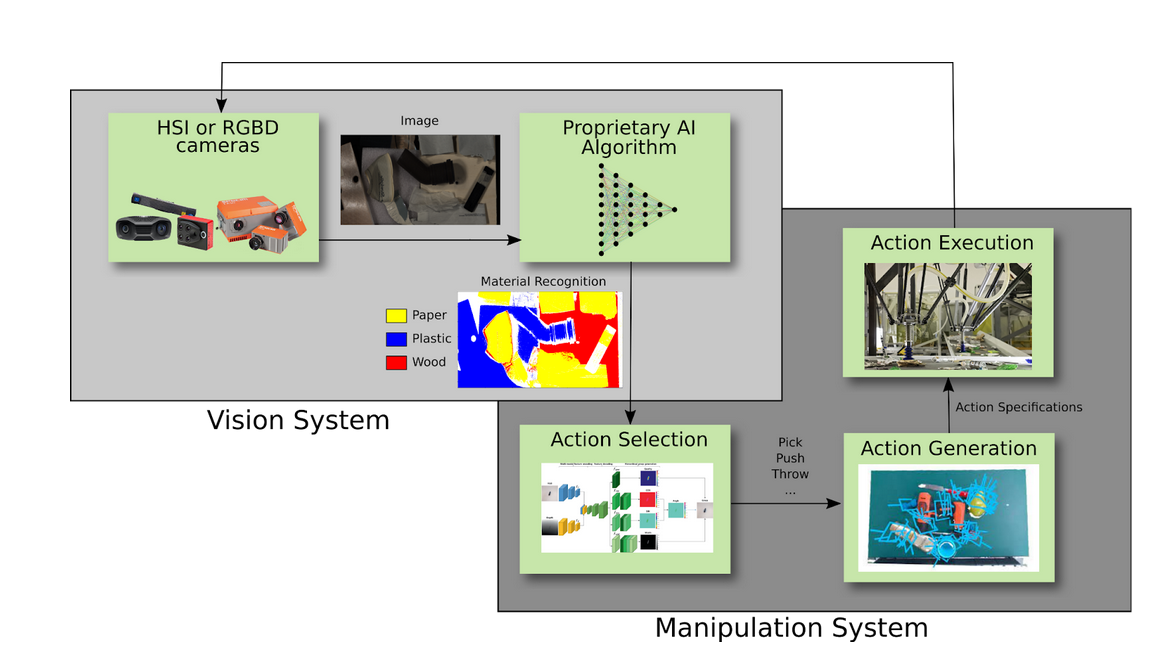

The system will be composed of a Vision System and a Manipulation System. The Vision System exploits state-of-the-art Deep Learning approaches to recognize different types of objects and materials in a cluttered stream through RGB-D and multispectral cameras. Since the traditional hyperspectral imaging used for material recognition outputs very redundant data, our aim is to develop algorithms that can work with less rich data without affecting performance and flexibility, and consequently to lower hardware costs. We are also investing in data augmentation and synthetic data generation techniques in order to generate large amounts of training data with little effort.

The Manipulation System will be responsible for guiding the robot during complex manipulation operations, such as grasping in clutter, rummaging and tossing, in order to mimic human movement. In this direction, Reinforcement Learning and Self-Supervised Learning have shown promising results in laboratory settings. We are going to extend these approaches to real-world settings, for example by handling objects with irregular geometries and entangled objects, and we are also considering Learning by Demonstration techniques.

RWSS aims to increase the quality and quantity of recovered material, boosting the efficiency and economic sustainability of the recycling process. With this project, we want to make an impact at social and economic levels in order to push our development model toward a more circular and sustainable scheme.

Awards and Prizes

Our project won a prize of 10,000 euros at SMACT call4ideas 2022 for “Zero-waste & green transition”. We also participated in the “Start Cup Veneto 2022”, a regional business plan competition, placing fourth overall (out of more than 40 proposals) and receiving a special mention which gave us the opportunity to compete in the “National Innovation Prize 2022”.

Contacts

If you are interested in collaborating on the project or would like more information, please contact Dr. Bacchin Alberto.

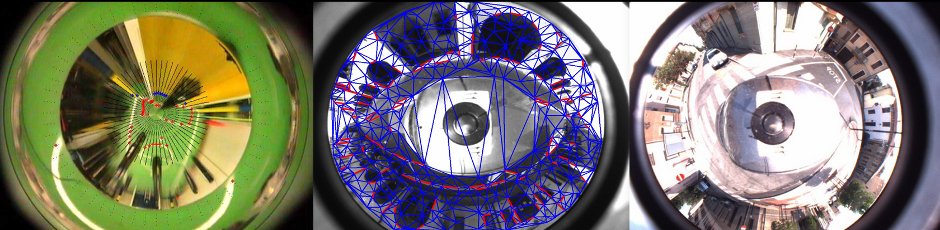

Underwater robots (UID, Underwater Inspection / Intervention Drones) represent essential tools for carrying out complex assembly and maintenance operations of plants in underwater environments. The growing interest in highly sustainable offshore energy hubs will make these tools even more important, since they can carry out heavy but necessary operations in underwater environments in a totally safe way. The SubEye project has the ambitious goal of providing the UIDs developed by the project partner Saipem with an advanced autonomous perception system capable of increasing their productivity and effectiveness.

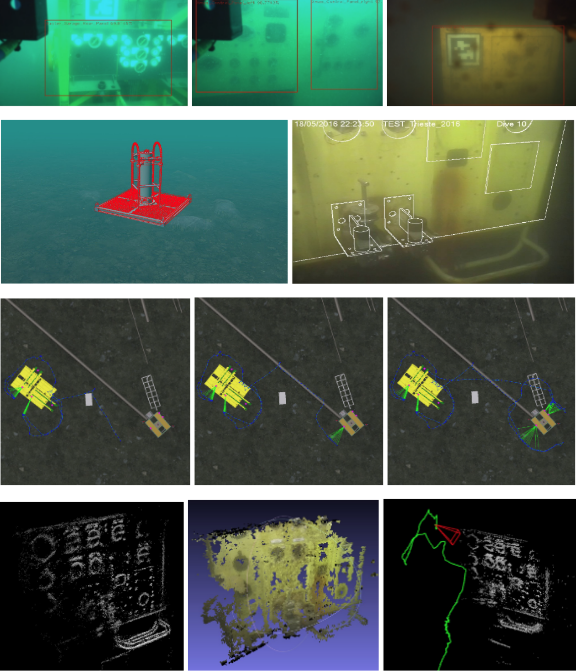

The SubEye project involves several perception modules (Fig. 2) used to detect, classify and estimate the position of objects of interest, to locate the UID within the working area and to reconstruct the 3D structure of the surrounding environment or of parts of interest. The obtained results will represent the backbone of a control system that will allow UIDs to perform complex operations on submarine infrastructures in total autonomy.

In the two images the FlatFish, indicated for inspection operations. Both are designed to operate in wire-guided mode (ROV) or in AUV (Autonomous Underwater Vehicle) mode, to perform autonomous missions following a given plan.

The hostility of the underwater environment, combined with the lack of any communication infrastructure and global positioning, introduces important scientific challenges. They will be addressed by introducing innovative solutions in the fields of computer vision, the generation of synthetic datasets and the integration of sensory information from heterogeneous sensors.

The developed technologies offer several advantages: the higher productivity and the consequent savings will allow UIDs to be used on a large scale, increasing the possibilities for sustainable exploitation of the sea. In addition, the UIDs equipped with the SubEye system will be designed to be used as offshore resident systems, so they will not require the continuous use of a dedicated support vessel, with clear advantages for the environment and for personnel safety.

Objectives of the SubEye system: (First row) Detect and classify objects of interest; (Second row) Accurately estimate the location of infrastructures and parts of interest; (Third row) Locate the UID within the working area; (Fourth row) Reconstruct the 3D structure of the surrounding environment or of parts of interest.

their teaching practices and update their training methods and materials. The ENGINE project aims at supporting higher education institutions in sharing good practices which were initiated during the COVID-19 emergency in the Spring of 2020.

BrainGear

Brain-machine interfaces 2.0: A threefold symbiotic learning entity

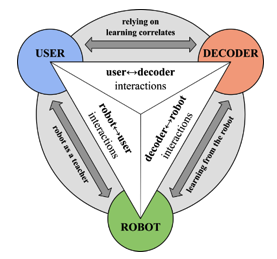

Brain-machine interfaces (BMIs) are systems that translate human brain patterns into commands for robotic devices, with the goal of restoring the independence of users with severe motor disabilities. However, despite the flourishing of brain-actuated prototypes, after three decades of research BMIs still lack practicable solutions for daily use by end-users. Current research approaches, which assume that a BMI should be treated only as a decoder tool, have not been able to meet this translational challenge.

BrainGear is a two-year project, funded by the Department of Information Engineering of the Università degli Studi di Padova, that aims at departing radically from the traditional approach by considering the BMI as a multi-agent learning entity where the three actors involved (user, decoder, and robotic device) have to learn from each other.

The objective of BrainGear is threefold:

1) To identify new neural correlates of user learning to make the BMI decoder follow and adapt to the user’s learning curve

2) To develop advanced shared-control algorithms that fuse the BMI decoder output and the robot intelligence to make the robot learn

3) To propose innovative interaction strategies where the robot acts as a teacher for the user’s training

The project will evaluate the existence of these learning interactions in an experimental scenario based on a brain-driven powered wheelchair.
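One common way to picture the shared-control idea in objective 2 is a probabilistic fusion of two opinions about the next command: what the BMI decoder believes the user wants, and what the robot considers feasible given its sensors. The sketch below is only an illustration under that assumption. It is not the BrainGear algorithm, and the function names, command labels and probability values are all hypothetical.

```python
def fuse_commands(decoder_probs, robot_probs):
    """Fuse two distributions over commands by element-wise product.

    decoder_probs: hypothetical BMI decoder output, e.g. {"left": 0.55, ...}
    robot_probs:   hypothetical robot-side feasibility, e.g. low for a
                   direction blocked by an obstacle.
    Returns a normalized distribution over the decoder's commands.
    """
    joint = {c: p * robot_probs.get(c, 0.0) for c, p in decoder_probs.items()}
    total = sum(joint.values())
    if total == 0.0:
        # The two sources fully disagree: fall back to the decoder alone.
        return dict(decoder_probs)
    return {c: p / total for c, p in joint.items()}


def select_command(decoder_probs, robot_probs):
    """Pick the command with the highest fused probability."""
    fused = fuse_commands(decoder_probs, robot_probs)
    return max(fused, key=fused.get)


if __name__ == "__main__":
    # The decoder slightly favors "left", but the robot sees an obstacle there.
    decoder = {"left": 0.55, "right": 0.45}
    robot = {"left": 0.10, "right": 0.90}
    print(select_command(decoder, robot))
```

In this toy case the weak decoder preference for "left" is overruled by the robot's strong evidence against it, which is the kind of behavior a shared-control scheme is meant to produce when the decoder output is uncertain.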

BrainGear is led by Luca Tonin and benefits from the multidisciplinary experience of a research group that merges the expertise in brain-machine interfaces and advanced human-robot interaction of the IAS-Lab, the neurophysiological competence in clinical/physiological interpretation and in advanced EEG analysis of the NEUROMOVE-Rehab laboratory (Department of Neuroscience, Università degli Studi di Padova) and the know-how in mechanical design of rehabilitation devices of the Sports & Rehabilitation Engineering Lab (Department of Industrial Engineering, Università degli Studi di Padova).

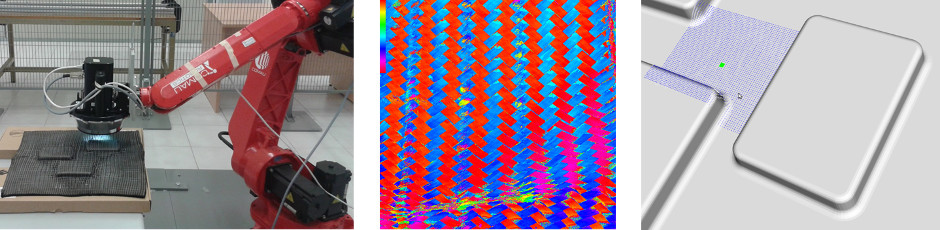

Draping is a process used for about 30% of all carbon fiber composite parts to place layers of carbon fiber fabric in a mould. During this process the flat fabric distorts to fit the shape of the mould. Ensuring the accuracy of draping in terms of position and fiber orientation while avoiding wrinkles is a challenging task.

The DrapeBot project aims at human-robot collaborative draping. The robot is supposed to assist during the transport of the large material patches and to drape in areas of low curvature. The role of the human is to drape regions of high curvature.

To enable efficient collaboration, DrapeBot develops a gripper system with integrated instrumentation, low-level control structures, and AI-driven human perception and task planning models. All of these developments aim at a smooth and efficient interaction between the human and the robot. Specific emphasis is put on trust and usability, due to the complexity of the task and the size of the robots involved. The DrapeBot project runs over a period of four years, from January 2021 to December 2024.

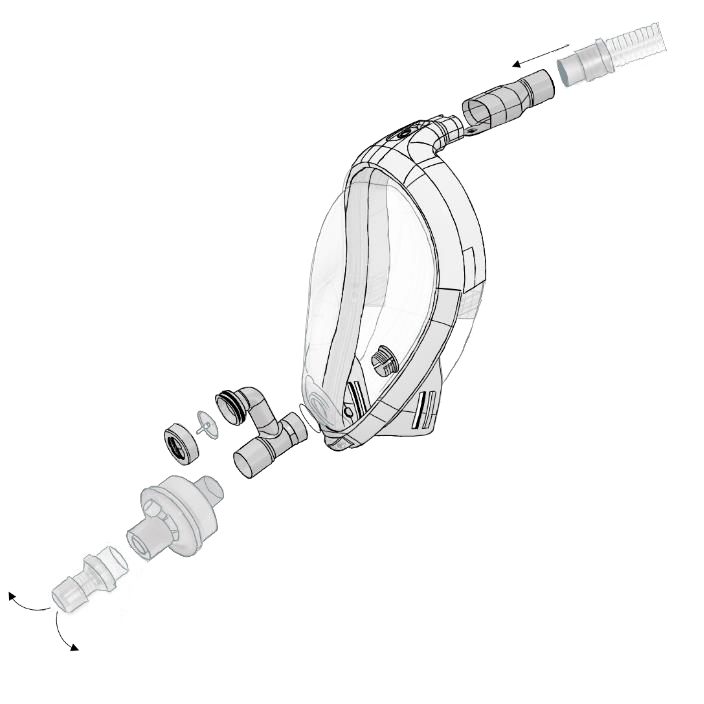

Fig. 1 - UNIPD-Mask

The COVID-19 pandemic spread rapidly in Italy in early 2020, with over 200,000 positive cases and more than 30,000 deaths (Protezione Civile Update). Hospitals faced a shortage of Continuous Positive Airway Pressure (C-PAP) mechanical ventilation masks. This non-invasive treatment offers essential support for patients with respiratory difficulties, such as those with COVID-19, and can potentially avoid their admission to intensive care. Through an airtight connection with patients' airways, C-PAP devices create a constant positive pressure airflow while improving the patients' breathing capacity. As a result, supplying C-PAP devices became essential, and this need led to the development of alternative solutions.

The Department of Information Engineering and the Department of Medicine of the University of Padova (Italy) developed UNIPD-Mask (see Fig. 1 and 2): a set of valves that converts EasyBreath, the snorkeling mask developed and marketed by Decathlon, into a C-PAP mask (patent pending n. IT 1020200008305). This repository collects 3D models of the developed parts: https://github.com/iaslab-unipd/UNIPD-Mask.git. They are freely accessible and replicable.

Fig. 2 - UNIPD-Mask real picture

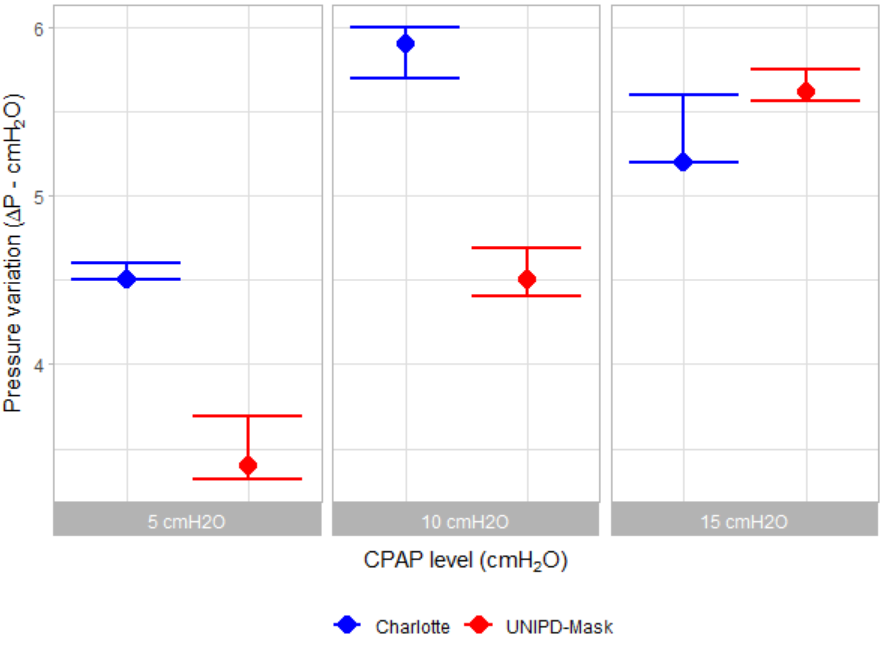

The proposed invention follows the idea of Isinnova SRL (Brescia, Italy) in the development of Charlotte and solves Charlotte's problems related to the limited section of its air inlet and outlet ducts: during inhalation, systems equipped with the Charlotte valve are not able to compensate for the volume of air inhaled by the patient, resulting in a pressure drop. Moreover, during exhalation, this configuration does not allow for rapid air evacuation, causing a feeling of fatigue in the patient. UNIPD-Mask, which uses a double channel for the incoming airflow, is instead able to provide a greater volume of air to the patient, without a drop in pressure inside the mask during inhalation (see Fig. 3).

Fig. 3 - Comparison between Charlotte (blue) and UNIPD-Mask (red) in terms of pressure variation

Finally, UNIPD-Mask adds an anti-suffocation valve which, in the event of an accidental interruption of air and oxygen flow, allows the patient to continue breathing. It is also possible to connect an outgoing air filter.

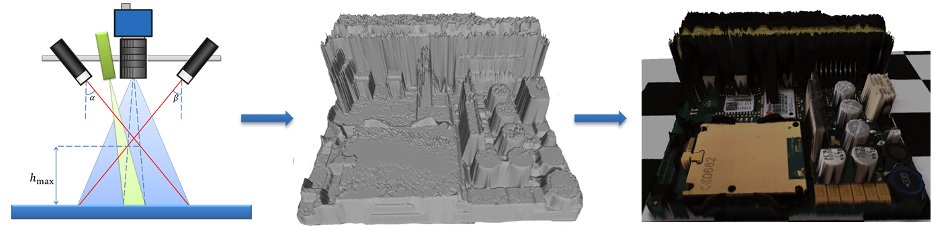

The objective of SPIRIT is to take the step from programming of robotic inspection tasks to configuring such tasks. This includes inspection tasks that use image-based sensors and require continuous motion to fully scan a part’s surface.

The project aims at:

• creating fully automatic offline path planning methods that ensure collision-free full coverage of the areas to be inspected on the part, also for complex inspection processes.

• developing reactive inline path planning that is able to automatically adjust to small changes in the environment, such as a different part shape or obstacles.

• achieving a seamless mapping of image sensor data to a 3D model of the part.

• generating operational data of inspection robots in industrial environments. This will include data related to accuracy, cycle times and performance indicators of the integrated system.
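As a toy illustration of what "full coverage" means for a scanning path, the sketch below generates a back-and-forth (boustrophedon) sweep over a rectangular grid of surface cells. This is not the SPIRIT planner: it ignores collisions, robot kinematics and sensor footprint, and the grid abstraction and function name are assumptions made only for illustration.

```python
def boustrophedon_path(rows, cols):
    """Return grid cells in back-and-forth order so every cell is visited once.

    Even rows are swept left to right and odd rows right to left, so
    consecutive waypoints are always neighbors and the motion is continuous.
    """
    if rows < 0 or cols < 0:
        raise ValueError("rows and cols must be non-negative")
    path = []
    for r in range(rows):
        if r % 2 == 0:
            columns = range(cols)
        else:
            columns = range(cols - 1, -1, -1)
        path.extend((r, c) for c in columns)
    return path


if __name__ == "__main__":
    for cell in boustrophedon_path(2, 3):
        print(cell)
```

A real inspection planner would replace the flat grid with patches on the part's 3D model and check each motion for collisions, but the coverage requirement is the same: every patch must appear on the path.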

The expected impact is:

• Reducing the engineering costs for setting up a robotic inspection task by 80%

• Creating a software framework that allows the shift from project-based, ad-hoc solutions to a product-based approach.

• Lowering the barrier to introducing automatic inspection systems by aiming at a return on investment in less than 2-3 years.

• Realizing a potential of several hundred additional robotic installations per year.

• Helping SMEs to reach out to worldwide markets by providing a proven framework for inspection robots.

The SPIRIT project had its first meeting in Steyr on 27-28 February. The general meeting will be held on 24-26 July at the University of Padova.

Harvard and Padova Universities to prevent the risk of falling in the elderly

Instability is the main cause of falls among elderly people, which increases the risk of fractures and therefore disability, with high health and social costs. Fall prevention is one of the targets of social and health policies for the promotion of active aging.

The SoftAct project aims to meet this need by developing an innovative neuromuscular controller integrated in a "soft exoskeleton" (exosuit: soft wearable robot) for the lower limb, which can detect the loss of stability during walking or standing by integrating biomechanical, cerebral, and muscular signals. This information will activate compensation systems integrated in the soft exoskeleton to prevent possible falls, with an integrated feedback-feedforward system.

The project will see the cooperation of two research groups: Harvard University, which has experience in gait and muscle signal analysis (EMG) and created the prototype of the exosuit, and the University of Padova, with its deep knowledge in the field of brain signal analysis (EEG) and intelligent software for robotics. Prof. Alessandra De Felice of the Neuroscience Department is the coordinator of the project, and is working with the Department of Information Engineering and Prof. Emanuele Menegatti.

A video explaining the work carried out by the "Robotics and Neuroscience" group of the University of Padova.

Click on this link for the same video but in the ITALIAN language.

eCraft2Learn (http://project.ecraft2learn.eu/) is a two-year Horizon2020 project that started on 1 January 2017. The aim of the project is to reinforce personalized learning and teaching in STEAM education and to promote the development of 21st century skills and the employability of students aged 13-17 in the EU. For this purpose, eCraft2Learn will research, design and validate an ecosystem based on digital fabrication and making technologies for creating computer-supported artefacts.

Our role in the eCraft2Learn project is the evaluation of suitable open source 3D printers and DIY electronics that will be integrated with the eCraft2Learn ecosystem through a unified user interface, and the planning of the unified ecosystem.