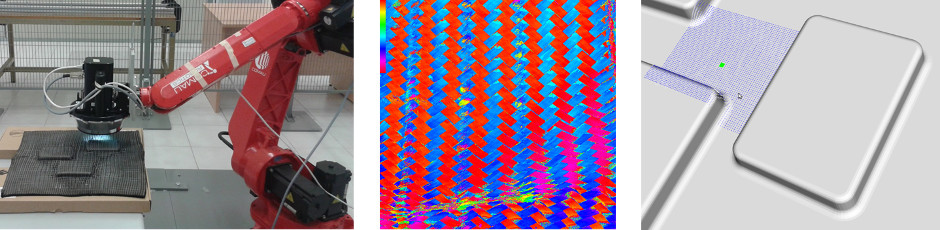

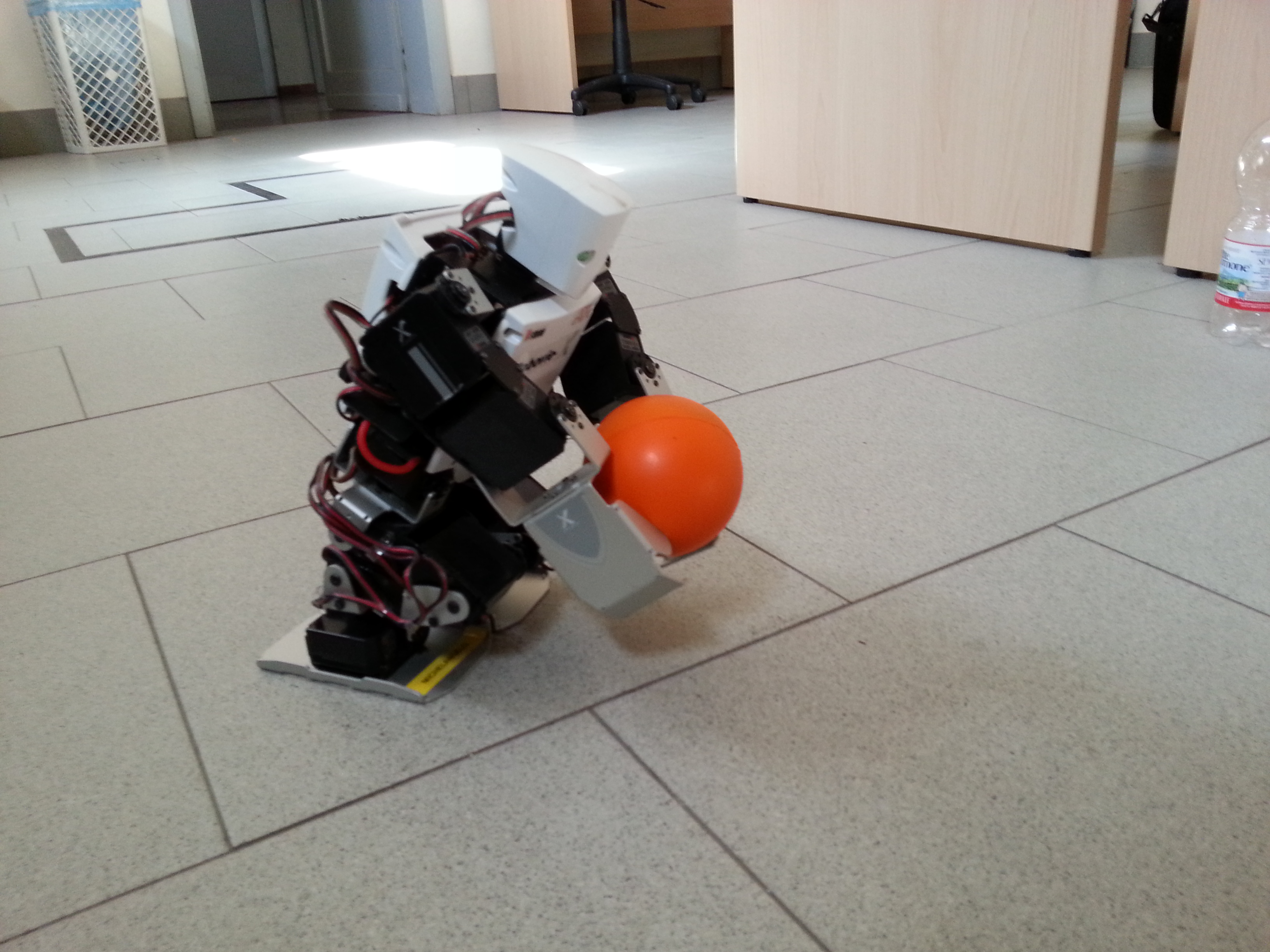

In this lab experience we introduce a humanoid robot, the Robovie-X, and use an Xbox 360 Kinect sensor to record human movements that are then remapped onto the robot and reproduced by it. The Kinect sensor is used for data acquisition, while the rviz and Gazebo tools are used to reproduce the recorded human poses on the robot.

Your objective is to make the robot crouch and grasp an object placed in a fixed position in front of it, using your own movements as captured by the Kinect sensor.

You have to monitor the robot's stability to prevent it from falling during the task.
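As a possible starting point for the stability part of the task, one common static criterion is that the ground projection of the robot's center of mass (CoM) must stay inside the support polygon spanned by the feet. The sketch below assumes you already have the CoM projection and the foot-contact corners as (x, y) points in the same ground-plane frame (computing them, e.g. from the URDF link masses and tf, is not shown). The foot coordinates are hypothetical, not Robovie-X specifications, and the check ignores dynamics, so it is only meaningful for slow, quasi-static motions.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def convex_hull(points: List[Point]) -> List[Point]:
    """Andrew's monotone chain: hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o: Point, a: Point, b: Point) -> float:
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower: List[Point] = []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    upper: List[Point] = []
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def stability_margin(com_xy: Point, contacts: List[Point]) -> float:
    """Smallest signed distance from the CoM projection to the support-polygon edges.

    Positive: inside the polygon (statically stable); negative: outside.
    """
    hull = convex_hull(contacts)
    if len(hull) < 3:
        raise ValueError("need at least three non-collinear contact points")
    margin = math.inf
    for i in range(len(hull)):
        (x1, y1), (x2, y2) = hull[i], hull[(i + 1) % len(hull)]
        # The hull is counter-clockwise, so the interior lies to the left of every edge.
        left = (x2 - x1) * (com_xy[1] - y1) - (y2 - y1) * (com_xy[0] - x1)
        margin = min(margin, left / math.hypot(x2 - x1, y2 - y1))
    return margin


# Hypothetical foot corners (metres) for a double-support stance.
FEET = [(-0.02, 0.02), (0.04, 0.02), (0.04, 0.06), (-0.02, 0.06),
        (-0.02, -0.06), (0.04, -0.06), (0.04, -0.02), (-0.02, -0.02)]
print(stability_margin((0.01, 0.0), FEET))  # about 0.03
print(stability_margin((0.06, 0.0), FEET))  # negative: CoM outside the polygon
```

A margin that shrinks toward zero while crouching is a hint to slow down or shift the hips back before the robot tips over.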

At the end of the experience...

Objectives

- Make the robot grasp the object without falling down

- Understand the basic ROS concepts (tf)

Plus

- Object Oriented (OO) approach

- Use several objects

Challenges

- Grasp a rigid rectangular box

What we want

- Code (BitBucket)

- Video (YouTube or BitBucket)

- Report (PDF, submitted via Moodle) containing:

  - The algorithm overview

  - The procedures used to keep the robot stable

  - The main problems encountered with the robot and their causes

  - A description of the relationships between the tfs

Step 1: Set up and test the Kinect and the skeleton tracker

First of all, we have to download some packages.

    cd ~/Workspace/Groovy/rosbuild_ws
    git clone git@bitbucket.org:iaslab-unipd/action_model.git
    rosws set action_model
    cd ~/Workspace/Groovy/catkin_ws/src
    git clone git@bitbucket.org:iaslab-unipd/magic_folder.git
    cd magic_folder
    git checkout dev_catkin
    cd ../..
    catkin_make

The action_model package won't work with the installed NITE libraries because of a bug in them, so we need to download a newer version (64-bit) from the OpenNI website.

    cd ~/Workspace
    wget http://www.openni.org/wp-content/uploads/2012/12/NITE-Bin-Linux-x64-v1.5.2.21.tar.zip
    unzip NITE-Bin-Linux-x64-v1.5.2.21.tar.zip
    tar -xjvf NITE-Bin-Linux-x64-v1.5.2.21.tar.bz2
    cd NITE-Bin-Dev-Linux-x64-v1.5.2.21
    sudo ./uninstall.sh
    sudo ./install.sh

If you are running 32-bit Ubuntu, try replacing "x64" with "x86" in the commands above.
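If you script the installation, the archive and directory names can be derived from the machine architecture. The sketch below simply applies the x64/x86 substitution suggested above to the names used in the commands, so the 32-bit names are unverified guesses. It relies on `platform.machine()`, which reports `x86_64` on 64-bit Linux; treating every other value as 32-bit x86 is our simplifying assumption.

```python
import platform
from typing import Dict, Optional

NITE_VERSION = "v1.5.2.21"
BASE_URL = "http://www.openni.org/wp-content/uploads/2012/12/"


def nite_names(machine: Optional[str] = None) -> Dict[str, str]:
    """Return the NITE download URL, archive names and install directory for a CPU type."""
    machine = machine or platform.machine()
    # Assumption: x86_64/amd64 means 64-bit, anything else is treated as 32-bit x86.
    arch = "x64" if machine.lower() in ("x86_64", "amd64") else "x86"
    stem = f"NITE-Bin-Linux-{arch}-{NITE_VERSION}"
    return {
        "url": BASE_URL + stem + ".tar.zip",
        "zip": stem + ".tar.zip",
        "tarball": stem + ".tar.bz2",
        "install_dir": f"NITE-Bin-Dev-Linux-{arch}-{NITE_VERSION}",
    }


if __name__ == "__main__":
    for key, value in nite_names().items():
        print(f"{key}: {value}")
```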

You can now compile and run the skeletal tracker.

    source ~/.bashrc
    rosmake action_model
    roslaunch action_model openni_tracker.launch

If everything is correct, you should get something like the following.

    ...
    frame id = openni_rgb_optical_frame
    rate = 30
    file = /opt/ros/electric/stacks/unipd-ros-pkg/ActionModel/config/tracker.xml
    InitFromXml... ok
    Find depth generator... ok
    Find user generator... ok
    StartGenerating... ok
    ...

Now, when a person steps in front of the camera, a new user is identified. To calibrate the system and track the user with respect to the Kinect, the person has to move a little.

If everything runs correctly, you will see:

    ...
    New User 1
    Calibration started for user 1
    Calibration complete, start tracking user 1
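If a script needs to know when tracking has actually started (for example before starting a recording), one option is to scan the tracker's console output. The sketch below only recognizes the three message formats shown above; any other messages the tracker may print are ignored, and how you feed it the lines (e.g. piping the roslaunch output into the script) is an assumption about your setup.

```python
import re
from typing import Dict, Iterable

# Patterns written against the sample tracker output shown above.
PATTERNS = [
    (re.compile(r"^New User (\d+)$"), "detected"),
    (re.compile(r"^Calibration started for user (\d+)$"), "calibrating"),
    (re.compile(r"^Calibration complete, start tracking user (\d+)$"), "tracking"),
]


def user_states(lines: Iterable[str]) -> Dict[int, str]:
    """Return the latest known state of each user id seen in the log lines."""
    states: Dict[int, str] = {}
    for raw in lines:
        line = raw.strip()
        for pattern, state in PATTERNS:
            match = pattern.match(line)
            if match:
                states[int(match.group(1))] = state
                break
    return states


log = """...
New User 1
Calibration started for user 1
Calibration complete, start tracking user 1
New User 2
"""
print(user_states(log.splitlines()))  # prints {1: 'tracking', 2: 'detected'}
```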

Visualize the tracked person by starting rviz:

    rosrun rviz rviz

and set up some basic properties:

- Fixed frame: /openni_camera

- Add: PointCloud2

  - Topic: /camera/rgb/points

  - ColorTransform: RGB8

  - Style: points

- Add: TF

You should see something like this:

This package provides a set of tools for recording from and playing back to ROS topics. You will use it to record tf transforms.

DONE!!

Step 2: Remapping the human joints onto the corresponding Robovie-X joints

tf is a package that lets the user keep track of multiple coordinate frames over time. It maintains the relationships between coordinate frames in a tree structure buffered in time, and lets the user transform points, vectors, etc. between any two coordinate frames at any desired point in time. This makes it possible to answer questions like:

- Where was the head frame relative to the world frame, 5 seconds ago?

- What is the pose of the object in my gripper relative to my base?

- What is the current pose of the base frame in the map frame?
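To make the tree idea concrete, here is a toy, dependency-free model of what tf does at a single instant: each frame stores its pose relative to its parent, and the pose of one frame in another is obtained by chaining transforms up to the common root and inverting one chain. It deliberately leaves out time buffering, quaternions and everything ROS-specific, and the frame names and offsets are hypothetical. In a real rospy node you would not build this yourself but query a `tf.TransformListener`, e.g. `lookupTransform(target_frame, source_frame, rospy.Time(0))`.

```python
import math
from typing import Dict, List, Optional

Matrix = List[List[float]]


def transform(x: float, y: float, z: float, yaw: float = 0.0) -> Matrix:
    """Homogeneous 4x4 transform: rotation about z by `yaw` (rad) plus translation."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [[c, -s, 0.0, x], [s, c, 0.0, y], [0.0, 0.0, 1.0, z], [0.0, 0.0, 0.0, 1.0]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def invert(t: Matrix) -> Matrix:
    """Inverse of a rigid transform: rotation R^T, translation -R^T p."""
    r_t = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [t[i][3] for i in range(3)]
    q = [-sum(r_t[i][k] * p[k] for k in range(3)) for i in range(3)]
    return [r_t[0] + [q[0]], r_t[1] + [q[1]], r_t[2] + [q[2]], [0.0, 0.0, 0.0, 1.0]]


class FrameTree:
    """Toy, time-less stand-in for a tf tree with a single root."""

    def __init__(self) -> None:
        self.parent: Dict[str, Optional[str]] = {}
        self.pose_in_parent: Dict[str, Matrix] = {}

    def add_root(self, name: str) -> None:
        self.parent[name] = None
        self.pose_in_parent[name] = transform(0.0, 0.0, 0.0)

    def add_frame(self, name: str, parent: str, pose: Matrix) -> None:
        if parent not in self.parent:
            raise KeyError(f"unknown parent frame {parent!r}")
        self.parent[name] = parent
        self.pose_in_parent[name] = pose

    def _pose_in_root(self, name: str) -> Matrix:
        pose = transform(0.0, 0.0, 0.0)
        frame: Optional[str] = name
        while frame is not None:  # walk up the tree, pre-multiplying parent poses
            pose = matmul(self.pose_in_parent[frame], pose)
            frame = self.parent[frame]
        return pose

    def lookup(self, target: str, source: str) -> Matrix:
        """Pose of `source` expressed in `target`."""
        return matmul(invert(self._pose_in_root(target)), self._pose_in_root(source))


tree = FrameTree()
tree.add_root("world")
tree.add_frame("base", "world", transform(1.0, 0.0, 0.0, yaw=math.pi / 2))
tree.add_frame("head", "base", transform(0.0, 0.0, 0.5))
tree.add_frame("gripper", "base", transform(0.2, 0.0, 0.3))
head_in_world = tree.lookup("world", "head")
print([round(head_in_world[i][3], 3) for i in range(3)])  # [1.0, 0.0, 0.5]
```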

You can map your movements onto the Robovie-X joints by using a tf listener to read the transforms of the tracked skeleton frames.
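One possible way to turn tracked frames into a robot joint angle: read the positions of three consecutive skeleton frames (for example shoulder, elbow and hand) from the tf listener, all expressed in the same fixed frame, and compute the angle at the middle joint. This is a sketch under assumptions: the positions below are made-up values, and the zero/sign convention in `elbow_flexion` is hypothetical, so match it to the Robovie-X joint definitions in the URDF before use.

```python
import math
from typing import Sequence

Vec3 = Sequence[float]


def angle_at(joint: Vec3, a: Vec3, b: Vec3) -> float:
    """Angle (rad) at `joint` between the segments joint->a and joint->b."""
    u = [a[i] - joint[i] for i in range(3)]
    v = [b[i] - joint[i] for i in range(3)]
    nu = math.sqrt(sum(x * x for x in u))
    nv = math.sqrt(sum(x * x for x in v))
    if nu == 0.0 or nv == 0.0:
        raise ValueError("two frames coincide; the angle is undefined")
    cos_angle = sum(u[i] * v[i] for i in range(3)) / (nu * nv)
    # Clamp: rounding can push the cosine slightly outside [-1, 1].
    return math.acos(max(-1.0, min(1.0, cos_angle)))


def elbow_flexion(shoulder: Vec3, elbow: Vec3, hand: Vec3) -> float:
    """Hypothetical convention: 0 rad for a straight arm, growing as the elbow bends."""
    return math.pi - angle_at(elbow, shoulder, hand)


# In a ROS node these would be the translation parts of tf lookups of the tracker's
# shoulder/elbow/hand frames in one fixed frame; here they are made-up values.
shoulder, elbow, hand = (0.0, 0.0, 0.0), (0.0, 0.0, -0.3), (0.25, 0.0, -0.3)
print(round(math.degrees(elbow_flexion(shoulder, elbow, hand)), 1))  # 90.0
```

Working with angles between body segments, rather than copying absolute positions, keeps the mapping independent of the different limb lengths of the human and the robot.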

Set up your workspace by downloading and installing the robovie_x package.

    cd ~/Workspace/Groovy/rosbuild_ws
    git clone git@bitbucket.org:iaslab-unipd/robovie_x.git
    cd robovie_x
    git checkout dev_groovy
    mv robovie_x_teleoperation ~/Workspace/Groovy/catkin_ws/src
    mv robovie_x_model ~/Workspace/Groovy/catkin_ws/src
    cd ~/Workspace/Groovy/catkin_ws
    catkin_make

Then you can launch your packages.

    roslaunch robovie_x_teleoperation robovie_x_teleoperation.launch

You should become familiar with the GUI and the robot model, and explore the control mechanism underlying the movement.

You have to control the Robovie-X model in RViz by using the joint_state_publisher node, which publishes sensor_msgs/JointState messages:

    Header header
    string[] name
    float64[] position
    float64[] velocity
    float64[] effort
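The arrays in this message are parallel: name[i] goes with position[i], velocity[i] and effort[i], and the message definition allows an array to be left empty when that quantity is not provided. The dependency-free mirror below only makes that layout concrete; it is not the generated ROS class, and the header is reduced to a plain timestamp. In an actual rospy node you would import `JointState` from `sensor_msgs.msg`, fill `msg.header.stamp`, `msg.name` and `msg.position`, and publish it with a `rospy.Publisher` on the topic your launch files use (commonly `joint_states`).

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class JointStateSketch:
    """Plain-Python mirror of the sensor_msgs/JointState fields (header reduced to a stamp)."""
    stamp: float = 0.0
    name: List[str] = field(default_factory=list)
    position: List[float] = field(default_factory=list)
    velocity: List[float] = field(default_factory=list)
    effort: List[float] = field(default_factory=list)

    def validate(self) -> None:
        # Each array must match `name` in length, or be empty (quantity not provided).
        for label, values in (("position", self.position),
                              ("velocity", self.velocity),
                              ("effort", self.effort)):
            if values and len(values) != len(self.name):
                raise ValueError(f"{label} has {len(values)} entries for {len(self.name)} joints")

    def as_dict(self) -> Dict[str, float]:
        """Pair each joint name with its position."""
        self.validate()
        return dict(zip(self.name, self.position))


js = JointStateSketch(stamp=0.0,
                      name=["left_shoulder_pitch", "left_elbow"],  # hypothetical joint names
                      position=[0.35, 1.2])
print(js.as_dict())  # {'left_shoulder_pitch': 0.35, 'left_elbow': 1.2}
```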

joint_state_publisher is a node for publishing joint angles, either through a GUI or with default values. It publishes sensor_msgs/JointState messages for a robot: it reads the robot_description parameter, finds all of the non-fixed joints, and publishes a JointState message with all those joints defined.
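The "find all non-fixed joints" step can be illustrated with the standard library's XML parser. This is our own sketch, not joint_state_publisher's code: the URDF fragment is a toy, and the default-value rule (0, or the midpoint of the limits when 0 lies outside them) is an assumption you should check against the joint_state_publisher source for your ROS distribution. The real node may also treat special cases (for instance mimic joints) differently.

```python
import xml.etree.ElementTree as ET
from typing import Dict

# Tiny hypothetical URDF; the real one comes from the robot_description parameter.
URDF = """<robot name="toy">
  <link name="base"/><link name="upper_arm"/><link name="forearm"/><link name="camera"/>
  <joint name="shoulder" type="revolute">
    <parent link="base"/><child link="upper_arm"/>
    <limit lower="-1.57" upper="1.57" effort="1" velocity="1"/>
  </joint>
  <joint name="elbow" type="revolute">
    <parent link="upper_arm"/><child link="forearm"/>
    <limit lower="0.2" upper="2.0" effort="1" velocity="1"/>
  </joint>
  <joint name="camera_mount" type="fixed">
    <parent link="base"/><child link="camera"/>
  </joint>
</robot>"""


def default_joint_positions(urdf_xml: str) -> Dict[str, float]:
    """Map every movable joint to a default angle, skipping fixed and floating joints."""
    positions: Dict[str, float] = {}
    for joint in ET.fromstring(urdf_xml).findall("joint"):
        if joint.get("type") in ("fixed", "floating"):
            continue
        value = 0.0
        limit = joint.find("limit")
        if limit is not None and joint.get("type") != "continuous":
            lower = float(limit.get("lower", 0.0))
            upper = float(limit.get("upper", 0.0))
            if not lower <= 0.0 <= upper:
                # Assumed rule: zero is out of range, so use the middle of the limits.
                value = (lower + upper) / 2.0
        positions[joint.get("name")] = value
    return positions


print(default_joint_positions(URDF))
```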

The Robovie-X controller listens to the joint angles published by joint_state_publisher and moves each non-fixed joint accordingly.
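Since sudden jumps in the tracked angles are a likely cause of falls, it may help to filter the remapped angles before they reach the controller. The sketch below is our own assumption, not part of the provided packages: it drops human joints that have no robot counterpart, clamps each target to (hypothetical) joint limits, and bounds the change per update. The resulting dictionary could then be copied into the name and position arrays of a JointState message.

```python
from typing import Dict, Tuple

Limits = Dict[str, Tuple[float, float]]


def safe_command(target: Dict[str, float], current: Dict[str, float],
                 limits: Limits, max_step: float) -> Dict[str, float]:
    """Clamp each target angle to its limits and move at most `max_step` rad per update."""
    command: Dict[str, float] = {}
    for joint, goal in target.items():
        if joint not in limits:
            continue  # ignore human joints that have no robot counterpart
        lower, upper = limits[joint]
        goal = min(max(goal, lower), upper)
        start = current.get(joint, goal)  # unknown current state: jump straight to the goal
        step = min(max(goal - start, -max_step), max_step)
        command[joint] = start + step
    return command


# Hypothetical limits and readings: the elbow target 2.5 is clamped to 2.0,
# then the move from 1.0 is capped at 0.1 rad for this update.
print(safe_command({"elbow": 2.5, "wrist": 0.3}, {"elbow": 1.0},
                   {"elbow": (0.0, 2.0)}, max_step=0.1))
```

Calling this at a fixed rate with a small `max_step` trades responsiveness for smoother, slower motions, which is usually the right trade-off while the robot is crouching.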

DONE!!!